
DataRobot Feature Impact Ranking Interpretation


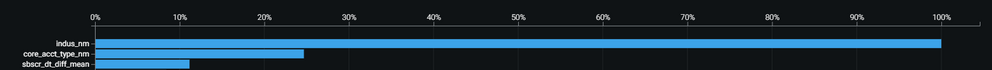

I would like to know how DataRobot interprets and calculates the percentages in the Feature Impact ranking shown above.

The difference in feature impact between the top feature (indus_nm, 100%) and the second feature (core_acct_type_nm, 24.68%) is huge: indus_nm scores about four times higher than core_acct_type_nm.

I would like to understand more about how DataRobot interprets the data and arrives at this Feature Impact ranking.

Thanks in advance for your help.


Hi hchewleo - Welcome to the community!

I'll give you something quick to read/watch while other SMEs get back to you.

Have you already seen these community articles?

Feature Impact—Custom Sample Size

What Features are Important to My Model?

Also, if you are already using DataRobot, make sure to look at the in-app Platform Documentation:

here (for the app/managed AI Cloud version) or here (for the AI Platform Trial version).

Hopefully this is helpful?

Linda


Thank you for your reply. We have read through your documentation on how Feature Impact is calculated in DataRobot (the permutation importance method). However, we have encountered a situation in which the Feature Impact ranking is highly skewed: starting from the second-ranked feature, the importance drops dramatically compared with the top feature. We have difficulty interpreting and explaining this result, and from a business perspective it may not make intuitive sense. In addition, we built several models with different combinations of features, and we still see the same skewed pattern in the Feature Impact ranking. We therefore suspect there might be some systematic methodology or calculation that we are not aware of. We hope you can help us out. Thank you.
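The permutation importance idea mentioned above can be illustrated in a few lines: shuffle one feature at a time, measure how much the model's error grows, then rescale. This is a minimal sketch of the general technique under stated assumptions, not DataRobot's implementation: it assumes a least-squares model, RMSE as the metric, synthetic data, a single shuffle per feature, and hypothetical helper names (`rmse`, `permutation_impact`). The final division by the maximum follows the normalization Colin describes later in the thread.

```python
import numpy as np


def rmse(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Root mean squared error, the accuracy metric assumed here."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def permutation_impact(predict, X: np.ndarray, y: np.ndarray, seed: int = 0) -> np.ndarray:
    """Raw impact of each column: error after shuffling it minus the baseline error."""
    rng = np.random.default_rng(seed)
    baseline = rmse(y, predict(X))
    impacts = np.empty(X.shape[1])
    for j in range(X.shape[1]):
        X_perm = X.copy()
        # Shuffling column j breaks its link to the target but keeps its distribution.
        X_perm[:, j] = rng.permutation(X_perm[:, j])
        impacts[j] = rmse(y, predict(X_perm)) - baseline
    return impacts


# Synthetic data: feature 0 drives most of the target, feature 1 a little, feature 2 nothing.
rng = np.random.default_rng(42)
X = rng.normal(size=(2000, 3))
y = 4.0 * X[:, 0] + 1.0 * X[:, 1] + 0.1 * rng.normal(size=2000)

# Ordinary least squares stands in for whatever model a blueprint would fit.
coef, *_ = np.linalg.lstsq(X, y, rcond=None)


def predict(data: np.ndarray) -> np.ndarray:
    return data @ coef


raw = permutation_impact(predict, X, y)
normalized = raw / raw.max()  # the top feature is rescaled to 1.0, i.e. 100%
print(np.round(normalized, 3))
```

In this synthetic setup the second feature lands far below the top one simply because it contributes much less to the target, which suggests a steep drop after the first bar is not by itself evidence of a calculation problem.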


Hi hchewleo,

The interpretation is that most of the predictive power comes from the top-ranked feature. The other features have much less impact on model accuracy.

When I see Feature Impact plots like this, there are two possible explanations, which I list in order of how often I see them:

1) Target leakage: this often happens when the dominant feature was extracted as of the current date rather than as of the date when the prediction would have been made.

2) The other features just aren't predictive: this may be due to the nature of the problem, or to data quality issues, e.g., too many missing values in the secondary features.
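One common guard against the leakage described in point 1 is a point-in-time (as-of) lookup: for each training row, use only the feature value that was recorded on or before the date the prediction would have been made. The sketch below assumes a hypothetical snapshot table; `Snapshot`, `value_as_of`, and the "active"/"closed" values are illustrative names, not DataRobot features or APIs.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Snapshot:
    """One recorded value of a feature for one customer (hypothetical schema)."""
    customer_id: str
    observed_on: date
    value: str


def value_as_of(snapshots: list[Snapshot], customer_id: str, prediction_date: date) -> Optional[str]:
    """Latest value recorded on or before prediction_date, or None if nothing was known yet."""
    eligible = [
        s for s in snapshots
        if s.customer_id == customer_id and s.observed_on <= prediction_date
    ]
    if not eligible:
        return None
    return max(eligible, key=lambda s: s.observed_on).value


history = [
    Snapshot("c1", date(2020, 1, 15), "active"),
    Snapshot("c1", date(2021, 6, 1), "closed"),  # recorded after the prediction date below
]

# Point-in-time value: what a model could actually have seen on 2020-12-31.
print(value_as_of(history, "c1", date(2020, 12, 31)))  # active
# "Current" value pulled today: it reflects the later outcome and leaks it.
print(value_as_of(history, "c1", date.today()))         # closed
```

If a dominant feature only looks predictive when it is pulled as of today, that is a strong hint of leakage.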

Do you see the same pattern across a few of the models that you built, or does it vary by the type of blueprint?

Colin


Hi Colin,

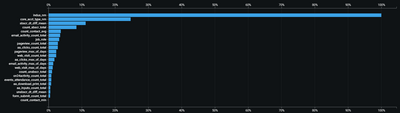

Thank you for your reply. We did not observe any target leakage in the models we built. However, we observed the same Feature Impact pattern across the different models (same algorithm, same blueprint, but different features). In each new model, we removed the top-ranked feature from the previous model, yet another feature rose to become the dominant one at 100%, and the second-ranked feature usually had a Feature Impact score below 50% of the top feature's.

We were actually expecting a more gradual decline in our Feature Impact chart, but we always observe a highly skewed result.

Could you provide more information? Thank you.


Hi hchewleo,

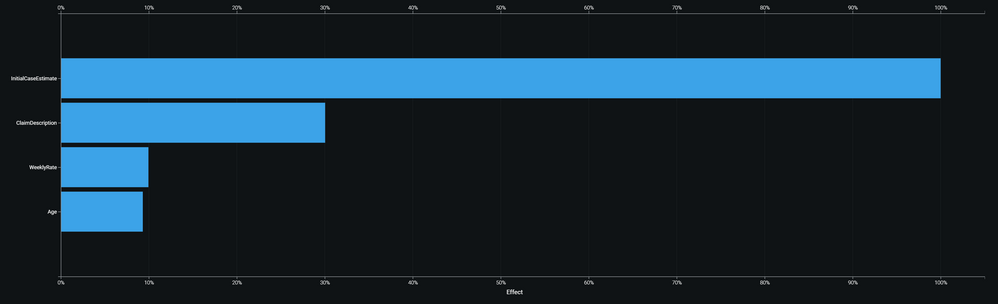

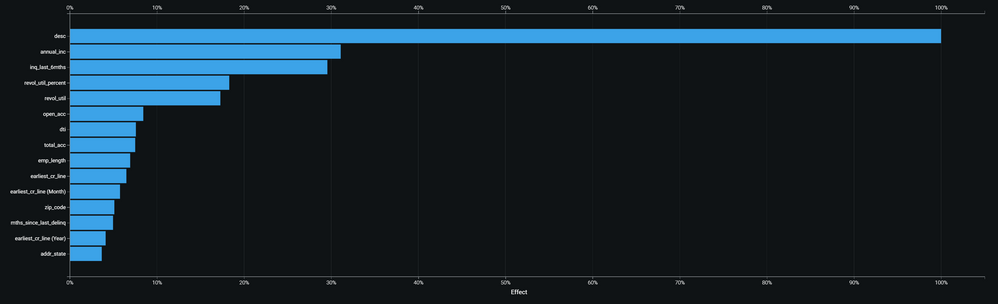

That latest screenshot doesn't raise any red flags with me. Across the hundreds of projects I've built, I usually see one feature that is stronger than the others, but that there are also 5 to 12 features that add incremental value. This is a typical result in machine learning.

Are any of your features correlated? You can check via the feature association matrix. How much accuracy do you lose when you drop the top feature?
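Both checks can be approximated outside DataRobot. This sketch is hypothetical: it uses synthetic data in which feature `b` is nearly a copy of feature `a`, plain Pearson correlation as a simpler stand-in for the feature association matrix (which may use other association measures), and an ordinary least-squares model scored in-sample. Because `b` carries almost the same information as `a`, dropping `a` raises the error only modestly; this is one possible reason a new feature rises to the top each time the leader is removed.

```python
import numpy as np


def rmse(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def fit_and_score(X: np.ndarray, y: np.ndarray) -> float:
    """Fit least squares with an intercept and return in-sample RMSE (a simplification;
    a holdout or cross-validation score would be more reliable)."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return rmse(y, design @ coef)


rng = np.random.default_rng(7)
n = 2000
a = rng.normal(size=n)
b = a + 0.3 * rng.normal(size=n)  # b is almost a copy of a
c = rng.normal(size=n)            # c is independent of a and b
X = np.column_stack([a, b, c])
y = 3.0 * a + 0.5 * c + 0.5 * rng.normal(size=n)

# Pairwise Pearson correlation between feature columns.
corr = np.corrcoef(X, rowvar=False)
print(np.round(corr, 2))

full = fit_and_score(X, y)
without_top = fit_and_score(X[:, 1:], y)  # drop column 0 (a), the strongest feature
print(f"RMSE with all features: {full:.3f}; without the top feature: {without_top:.3f}")
```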

Is there a random forest algorithm on your Leaderboard? This type of algorithm can spread feature importance across more features than the boosted-tree algorithms that typically dominate the top of the Leaderboard. If you see the same pattern for random forest, then you know the feature impact isn't due to a greedy algorithm concentrating on a single feature.

Is there a reason why you require or expect feature impact to be spread fairly evenly across several features?

Colin


Here are a couple of examples of feature impact from projects that I have built. They don't look too different from yours.


Hi hchewleo,

I can second Colin's view that your Feature Impact plot does not raise any red flags. In several client projects, we have seen even more skewed plots for models that are still highly accurate.


Hi Colin,

Thank you for your reply. We would like to clarify the statements below.

The DataRobot documentation states that Feature Impact is normalized:

"Feature Impact shows, at a high level, which features are driving model decisions. By default, features are sorted from the most to the least important. Accuracy of the top most important model is always normalized to 1."

We would like to clarify the last sentence: does it mean that ONLY the score of the top feature is normalized to 1, or have all the feature scores undergone normalization as well?

If it is the latter, we would also like to know whether the normalization uses the min-max formula, because if it did, the feature with the minimum score would have a normalized score of 0.

The normalization formula I mean here is: x_norm = (x - min(x)) / (max(x) - min(x))

Please help us clarify this part. Thank you so much for your help!


Hi hchewleo,

Great question! All feature scores are divided by the same scaling factor, chosen so that the top feature scores 100%. That means you can interpret each score as the importance of that feature relative to the most important feature.

The normalization we use is x_norm = x / max(x).

Colin
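The difference between x / max(x) and the min-max formula is easy to see on a few numbers. The raw scores below are hypothetical, not taken from the thread. With x / max(x), ratios between features are preserved, so the 24.68% in the original question implies the second feature's raw impact is roughly a quarter of the top feature's. The same rescaling also means whichever feature is strongest in a given model is always shown at 100%, so removing the leader necessarily produces a new 100% feature.

```python
def max_normalize(scores: list[float]) -> list[float]:
    """Divide every score by the largest one (x / max(x)); ratios are preserved."""
    top = max(scores)
    if top <= 0:
        raise ValueError("the largest score must be positive")
    return [s / top for s in scores]


def min_max_normalize(scores: list[float]) -> list[float]:
    """(x - min(x)) / (max(x) - min(x)); the smallest score always maps to 0."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        raise ValueError("scores must not all be equal")
    return [(s - lo) / (hi - lo) for s in scores]


# Hypothetical raw impact scores for three features.
raw = [0.8, 0.2, 0.1]

print(max_normalize(raw))      # [1.0, 0.25, 0.125]
print(min_max_normalize(raw))  # first value 1.0, last value 0.0

# Removing the top feature: the next one is rescaled to 100%.
print(max_normalize(raw[1:]))  # [1.0, 0.5]

# Ratio implied by the question's chart (100% vs 24.68%).
print(round(1 / 0.2468, 2))    # 4.05
```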