Difference in confusion matrix

Hello,

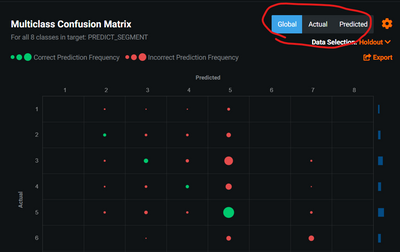

I was wondering what the difference is between the 'global', 'actual' and 'predicted' options in the confusion matrix?

Hey @oscarjung,

Thanks for posting your question and welcome to the community!

In short:

- Global looks at absolute accuracy

- Actual looks at Recall

- Predicted looks at Precision

Lets use this dataset as an example. The targets are A, B and C for simplicity in that order down and across the matrix:

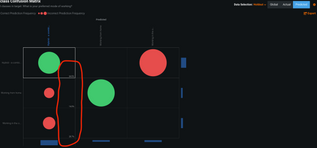

Lets start with the Actuals tab

In the first cell, the score is 98% because DataRobot correctly predicted 50 of the 51 records whose actual class is A. It missed 1 record and predicted it as Category C, hence the 2%. Summing up the Category A row gives you 100%. In the image above, there's only a very small display of the % breakdown. To look at it in more detail, you can export the CSV file by clicking the "Export" button I've circled in the image.
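To make the row-wise arithmetic concrete, here is a minimal sketch in plain Python. It is not DataRobot code. Only the Category A row (50 correct, 1 predicted as C) comes from the example above; the B and C rows, the `counts` layout, and the helper name `normalize_by_actual` are made up for illustration.

```python
# Rows are actual classes, columns are predicted classes, in the order A, B, C.
# Only the A row (50 correct, 1 predicted as C) comes from the post;
# the B and C rows are hypothetical counts added for illustration.
LABELS = ["A", "B", "C"]
counts = [
    [50, 0, 1],
    [11, 30, 4],
    [16, 5, 40],
]


def normalize_by_actual(matrix):
    """Divide each cell by its row total, so every actual-class row sums to 1."""
    result = []
    for row in matrix:
        total = sum(row)
        result.append([cell / total if total else 0.0 for cell in row])
    return result


actual_view = normalize_by_actual(counts)
for label, row in zip(LABELS, actual_view):
    print(label, [f"{value:.0%}" for value in row])
```

The diagonal cell of each row in this view is the recall for that class. For Category A it is 50 / 51, which rounds to 98%.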

The next tab is Predicted. This time we read column-wise: each category that DataRobot predicted sums up to 100%. This lets us see, when DataRobot predicts Category A for example, how often the record really was Category A and how often it actually belonged to another category. In the Predicted view for this dataset, 65% of the records that DataRobot predicted as Category A are actually Category A, which it got right. But 14% are actually Category B and 21% are actually Category C, so those are incorrectly predicted. Of all the predictions made for Category A, only 65% are correct.
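The column-wise view can be sketched the same way. Again this is plain Python rather than anything from DataRobot. The raw counts behind the 65% / 14% / 21% split are not given in the thread, so the first column below (50, 11, 16) is an assumption chosen to reproduce those percentages, and the remaining counts and the helper name `normalize_by_predicted` are hypothetical.

```python
# Rows are actual classes, columns are predicted classes, in the order A, B, C.
# The A row (50, 0, 1) comes from the post. The other counts are hypothetical,
# picked so that the "predicted A" column gives roughly 65% / 14% / 21%.
counts = [
    [50, 0, 1],
    [11, 30, 4],
    [16, 5, 40],
]


def normalize_by_predicted(matrix):
    """Divide each cell by its column total, so every predicted-class column sums to 1."""
    column_totals = [sum(column) for column in zip(*matrix)]
    return [
        [cell / total if total else 0.0 for cell, total in zip(row, column_totals)]
        for row in matrix
    ]


predicted_view = normalize_by_predicted(counts)

# Read down the first column: of everything predicted as A, what was it really?
predicted_a = [row[0] for row in predicted_view]
print([f"{value:.0%}" for value in predicted_a])
```

The diagonal cell of each column in this view is the precision for that class. With these counts, 50 of the 77 records predicted as A are truly A, which rounds to 65%.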

Hope that helps!

Regards

Hi @oscarjung, thanks for the question.

I think EuJin has given a great explanation, but if you would like to reference the DataRobot documentation, here's a link to it:

https://app.datarobot.com/docs/modeling/analyze-models/evaluate/multiclass.html

Hope that helps.

Hi @oscarjung,

I see @desmond_lim responded with a link to the Platform Documentation (in-app).

For the same link to the Platform Documentation (public), please take a look here:

https://docs.datarobot.com/en/docs/modeling/analyze-models/evaluate/multiclass.html

Hope this helps!

Sincerely,

Alex Shoop