Do custom inference models' capabilities differ from vanilla models?

Hi, I have been looking at custom model deployments and wanted to know whether their MLOps capabilities differ from those of standard deployments that use DataRobot's built-in models. Specifically, can custom models use Continuous AI features such as automatic retraining?

Hi Ira, I'm from the former Algorithmia; DataRobot acquired us about two months ago. The custom inference capabilities that Algorithmia brings differ quite a bit from the vanilla offering.

Model catalog, versioning, pipelining, governance, multi-language and dependency support, compute, orchestration, hardware, and monitoring are provided across models built in R, Python, Ruby, Rust, and more, or with DataRobot, H2O, Dataiku, AWS SageMaker, and others. Models run in Docker containers on Kubernetes with autoscaling, deployed on premises or in the cloud.

As I understand it, DataRobot cannot apply Continuous AI automatic retraining to these models, because they are assumed to be already-trained models that MLOps only monitors. For instance, Python models are pickled (serialized) before being uploaded to our MLOps and monitored, so I don't see how automatic retraining would work. However, you can replace the deployed model with another model.
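
To illustrate the point about pickling, here is a minimal stdlib-only sketch; `MeanModel` is a hypothetical toy, not a DataRobot API. The serialized artifact holds only the fitted parameters, and pickle records the class by reference rather than storing its source, so neither the training data nor the training code travels with what MLOps monitors. "Replacing the model" then means fitting a new one elsewhere and swapping the artifact.

```python
import pickle


class MeanModel:
    """Toy regressor that predicts the mean of its training targets (illustrative only)."""

    def __init__(self):
        self.mean = None

    def fit(self, y):
        # Only the learned parameter is stored on the instance; y itself is not kept.
        self.mean = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean for _ in X]


# Train once, then serialize the fitted model, as one would before uploading it.
artifact = pickle.dumps(MeanModel().fit([1.0, 2.0, 3.0]))

# What a monitoring platform receives: the fitted state only. pickle stores a
# reference to the class, not its code, so fit() cannot be rerun from these bytes alone.
deployed = pickle.loads(artifact)
print(vars(deployed))                # {'mean': 2.0}
print(deployed.predict([[0], [0]]))  # [2.0, 2.0]

# "Retraining" here means refitting on new data outside the artifact and swapping it in.
replacement = pickle.dumps(MeanModel().fit([4.0, 6.0]))
deployed = pickle.loads(replacement)
print(deployed.predict([[0]]))       # [5.0]
```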

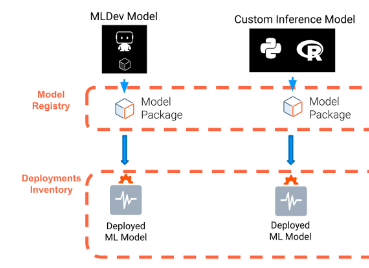

Thanks @dalilaB. Both MLDev and custom inference models are already trained before MLOps monitors them, so I assume what is passed to the model registry contains only model information (without the code used to train the model, etc.)?

I assume retraining could work if the model registry supported some kind of parameter that lets DataRobot pass training data to the model.
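
The idea above amounts to a contract in which the model exposes a training entry point that the platform can call with fresh data, instead of shipping only fitted parameters. Here is a minimal stdlib Python sketch of such a contract; `RetrainableModel`, `LinearModel1D`, and `platform_retrain` are hypothetical names for illustration, not part of any DataRobot API.

```python
from typing import Callable, List, Protocol, Sequence


class RetrainableModel(Protocol):
    """Hypothetical contract: the platform may call fit() with new training data."""

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[float]) -> "RetrainableModel": ...

    def predict(self, X: Sequence[Sequence[float]]) -> List[float]: ...


class LinearModel1D:
    """Toy single-feature least-squares regressor (illustrative only)."""

    def __init__(self) -> None:
        self.slope = 0.0
        self.intercept = 0.0

    def fit(self, X, y):
        xs = [row[0] for row in X]
        n = len(xs)
        mx, my = sum(xs) / n, sum(y) / n
        sxx = sum((x - mx) ** 2 for x in xs)
        sxy = sum((x - mx) * (t - my) for x, t in zip(xs, y))
        self.slope = sxy / sxx
        self.intercept = my - self.slope * mx
        return self

    def predict(self, X):
        return [self.slope * row[0] + self.intercept for row in X]


def platform_retrain(model_factory: Callable[[], RetrainableModel], X_new, y_new) -> RetrainableModel:
    """Hypothetical platform-side step: build a fresh model and pass it the new training data."""
    return model_factory().fit(X_new, y_new)


model = platform_retrain(LinearModel1D, [[0.0], [1.0], [2.0]], [1.0, 3.0, 5.0])
print(model.predict([[3.0]]))  # [7.0]
```

Passing a factory rather than an existing instance keeps each retrain independent of the previous model's state, which is one way a platform could guarantee that a new version depends only on the new data.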

One solution is to register the model in our model registry, after which it can be retrained; that is what the image above shows. Still, I will check with our MLOps experts and get back to you on Monday.
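
The registry-based approach can be pictured as a loop: the registry keeps versioned models, a monitoring check compares recent predictions with actuals, and a retraining trigger fits and registers a new version when accuracy degrades. The sketch below is stdlib Python under loud assumptions: `ModelRegistry`, `train_mean_model`, `maybe_retrain`, and the mean-absolute-error threshold are hypothetical stand-ins, not DataRobot's model registry or Continuous AI API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Model = Callable[[float], float]  # a "model" here is just a function from feature to prediction


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: keeps every model version and serves the latest."""

    versions: List[Model] = field(default_factory=list)

    def register(self, model: Model) -> int:
        self.versions.append(model)
        return len(self.versions)  # 1-based version number

    @property
    def current(self) -> Model:
        return self.versions[-1]


def train_mean_model(y: List[float]) -> Model:
    """Toy training routine: the resulting model ignores x and predicts the training mean."""
    mean = sum(y) / len(y)
    return lambda _x: mean


def maybe_retrain(registry: ModelRegistry, recent: List[Tuple[float, float]], threshold: float) -> bool:
    """Hypothetical accuracy trigger: if the mean absolute error on recent (x, actual) pairs
    exceeds `threshold`, train on the recent actuals and register a new version."""
    model = registry.current
    mae = sum(abs(model(x) - actual) for x, actual in recent) / len(recent)
    if mae <= threshold:
        return False
    registry.register(train_mean_model([actual for _, actual in recent]))
    return True


registry = ModelRegistry()
registry.register(train_mean_model([1.0, 2.0, 3.0]))  # version 1 predicts 2.0

recent = [(0.0, 10.0), (1.0, 12.0)]  # actuals have shifted upward
retrained = maybe_retrain(registry, recent, threshold=1.0)
print(retrained, len(registry.versions), registry.current(5.0))  # True 2 11.0
```

The key difference from the pickled-artifact case is that the training routine lives on the platform side, so a trigger can call it again with new data.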

Accepted solution: I just checked. Running `fit` inside DataRobot (for example, training scikit-learn models in Python) is currently in alpha.

Thanks. So I assume its final functionality hasn't been settled yet?

Yes.

Great. I only ask because the docs say it can be enabled, and I wanted to know its current state.

Hey @IraWatt, I'm sure you've looked here already, but just in case: https://community.datarobot.com/t5/blog/what-s-new-in-datarobot-release-7-2/ba-p/12469. See the Continuous AI (public preview) information and demo video.

Hey, thanks, I hadn't seen the demo!