FDW size in an AutoTS project

I've had the opportunity to play around with the Feature Derivation Window (FDW) size in one of DataRobot's AutoTS sales forecasting use cases. However, I'm not sure what determines its maximum size, or why.

Due to some irregularities in the missing dates in the data, DataRobot decided it would be better to aggregate features using a row-based approach (rather than a time-based one). Setting an FDW of, let's say, 100 rows seemed reasonable. Setting an FDW of about 350, however, was not accepted, because there were not "enough rows of data for training" (?).

Just to put things in context, the data comprises about 24 departments (i.e. multiseries), each with about 2.5 years of daily data, along with the aforementioned irregularities (i.e. not every department has exactly 2.5 years of daily data).

So my question is: why does choosing a feature derivation window of, say, 350 decrease the amount of data available to train with? Isn't the purpose of engineering features to add more potential signals (columns) to the existing data rather than take away from it?

And just to make things a bit more confusing: after setting the FDW size, clicking the Start button, and letting DataRobot generate the time-aware features, the number of rows in the resulting dataset is significantly larger than in the original.

Any clarification or resource would be of much help.

Thank you.

To calculate how much training data you have:

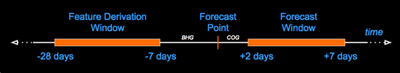

Available Training Data = Total Records - (FDW + BHG + COG + FW + Holdout)

If you have multiple backtests, though, the amount of available data can be reduced further (Community Explanation).
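As a rough illustration of the formula above, here is a minimal Python sketch. The function name and all the numbers are hypothetical (they are not taken from the original project or from any DataRobot API), and every quantity is assumed to be expressed in rows for a single series and a single backtest.

```python
def available_training_rows(total_records, fdw, bhg, cog, fw, holdout):
    """Rows left for training after subtracting every reserved span.

    Mirrors: Available = Total - (FDW + BHG + COG + FW + Holdout).
    All arguments are row counts; the result is floored at zero.
    """
    reserved = fdw + bhg + cog + fw + holdout
    return max(total_records - reserved, 0)


# Hypothetical figures: about 900 daily rows, a 7-row forecast window,
# a 90-row holdout, and no gaps.
rows_fdw_100 = available_training_rows(900, fdw=100, bhg=0, cog=0, fw=7, holdout=90)
rows_fdw_350 = available_training_rows(900, fdw=350, bhg=0, cog=0, fw=7, holdout=90)

print(rows_fdw_100, rows_fdw_350)
```

Under these assumed numbers, moving the FDW from 100 to 350 rows removes 250 rows from the training pool, and a shorter series could end up with nothing left at all.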

The FDW reduces the amount of training data because you can't train a model on features that haven't been generated yet. For instance, if the FDW is set to [-28 to 0], rows within the first 27 days would not have access to all the features generated from the FDW and therefore can't be trained on.
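To see why the earliest rows drop out, here is a small sketch of a row-based rolling feature in plain Python. It is only an illustration under simple assumptions (one series, one rolling-mean feature, made-up sales values) and not a description of how DataRobot derives features internally.

```python
from statistics import mean


def rolling_mean(values, window):
    """Mean of the `window` rows strictly before each row.

    Returns None for rows that do not yet have `window` rows of history.
    """
    out = []
    for i in range(len(values)):
        if i < window:
            out.append(None)  # not enough history to derive the feature
        else:
            out.append(mean(values[i - window:i]))
    return out


sales = [10, 12, 11, 13, 15, 14, 16, 18, 17, 19]  # made-up daily sales
feature = rolling_mean(sales, window=4)

# Keep only rows where the derived feature exists.
trainable = [(y, f) for y, f in zip(sales, feature) if f is not None]

print(feature)
print(len(sales), "rows in,", len(trainable), "rows usable for training")
```

With a window of 4, the first four rows have no derived value, so only six of the ten rows remain usable. A larger window pushes that cut-off further into the series.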

Setting a high FDW is a trade-off between more features and less training data. DataRobot University has a great course on the correct use of the FDW in conjunction with the other time series features here. Overall, DataRobot provides a good starting point for the FDW. For use cases where accuracy is paramount, building out separate projects with different FDWs is a recommended step.

As for your final point about the number of rows being higher, I'm not sure why that would be. Does it say the number of 'Datapoints' has increased?

Alright, so I completely agree with your assessment that the first 27 days would not have access to the features generated by the FDW. But what happens to the original features?

Even more so, let's say you picked an FDW of 60 days. According to your statement, the first 60 days would be void of engineered data. But even then, DataRobot creates multiple smaller FDWs within your set limit of 60. So, for example, it would also use derivation windows of 30, 10, 7, et cetera.

That being said, wouldn't some days, out of the 60 days, have some engineered data to work with?

I can understand there being relatively few rows where all engineered features turn out to be null, but even then, you still have some engineered features created by the smaller FDWs that are non-null. And, moreover, you still have the original features.

TL;DR: Even though you pick an FDW of 60 days, DataRobot will still pick smaller versions of the FDW, keeping in mind your limit of 60 days. This would still leave some of the 60 days with some engineered data to work with (along with the rest, which have nulls, of course). So why exclude them?
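To make the question concrete, here is a small sketch that counts how many rows would have none, some, or all of the windowed features available. The window sizes are the example values mentioned above (7, 10, 30, 60); the series length of 200 rows and the function name are made up for illustration, and this is not a claim about which windows DataRobot actually chooses.

```python
def rows_by_feature_coverage(n_rows, windows):
    """Count rows with no, some, or all windowed features available.

    A feature with window w is treated as available at row i once
    i >= w, i.e. once w earlier rows exist.
    """
    none = some = full = 0
    for i in range(n_rows):
        available = sum(1 for w in windows if i >= w)
        if available == 0:
            none += 1
        elif available < len(windows):
            some += 1
        else:
            full += 1
    return none, some, full


coverage = rows_by_feature_coverage(200, [7, 10, 30, 60])
print(coverage)  # (rows with no features, partial features, all features)
```

In this toy setup only the first 7 rows have no derived features at all, while 53 rows have a partial set, which are exactly the rows the question is about.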

As for my final point, that was my mistake. The overall data count does not increase; I misinterpreted it.

I agree it is a very fair point that the original features are potentially wasted. To include all the data lost to the FDW, the engineered features would need to be set to their column's median/mean, since models as a rule can't accept NULLs. This approach would introduce noise, especially with a high FDW. With a low FDW, the benefit would be only a couple more rows.

It would be good to hear from anyone in the community who has experimented with this approach.

Interesting!

So rather than keeping those nulls and imputing them with medians or otherwise, they drop the rows. This, however, also seems to hold true for smaller FDWs.

They still seem to prefer to drop these rows, which is probably the more conservative approach, as time series data can be pretty sensitive and its best practices can vary wildly from case to case.

Yes, I'd love to hear from anyone in the community who has tried this approach too.

Great discussion, @DREnthusiast and @IraWatt!

@IraWatt is correct about how the number of training rows is determined and that the number of backtests also impacts that calculation. The reason why the first n(FDW) rows are dropped is that including them as missing or anything else would introduce bias into the training data. Whatever value is used to represent the missing value is still a function of the fact that no value previously existed for those rows. This is entirely an artifact of the way in which the data were constructed and not of the underlying phenomenon we are trying to model. @IraWatt is correct that including them "would introduce noise". That noise is the signal from the data construction, not from anything we are actually interested in predicting.
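A toy sketch may help show what that construction artifact looks like. It assumes a made-up, perfectly linear sales series and a single 5-row rolling-mean feature, with the missing early values filled using the mean of the derived column. None of this reflects DataRobot internals; it only illustrates the argument.

```python
from statistics import mean

WINDOW = 5
sales = [100 + 2 * i for i in range(20)]  # made-up series with a steady trend

# Derived feature: mean of the previous WINDOW rows, None where unavailable.
feature = [
    mean(sales[i - WINDOW:i]) if i >= WINDOW else None
    for i in range(len(sales))
]

# Impute the early rows with the mean of the values that do exist.
fill_value = mean(f for f in feature if f is not None)
imputed = [fill_value if f is None else f for f in feature]

# Gap between the target and the feature, split by how the row was built.
real_gaps = [sales[i] - imputed[i] for i in range(WINDOW, len(sales))]
imputed_gaps = [sales[i] - imputed[i] for i in range(WINDOW)]

print("real rows:   ", real_gaps)
print("imputed rows:", imputed_gaps)
```

On rows with a genuinely derived feature, the target sits a constant 6 units above the feature. On the imputed rows the gap ranges from -18 to -10, a pattern that exists only because of how the rows were filled in, which is the kind of signal a model should not be learning.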

The only generic case for which dropping the earliest rows might actually introduce unwanted bias is when you believe that those earliest rows represent something specific about your series of interest that is not also represented within later rows. Generally speaking, this is a behavior of interest in your time series, which is usually observable in a time plot of your target. If that is the case, you should either bring in additional data from earlier in your series, prior to the behavior of interest, or shorten your FDW. In either case, you need to ensure that your new FDW doesn't exclude the period of interest from training. Note that this still is not a good argument for just including those rows (say, if you lack earlier data). Otherwise, the signal from the imputed values should not be expected to be predictive, as you likely won't be imputing them again, later in the series. In the case where you lack earlier data, I recommend just shortening your FDW to include as much training data as possible. Other strategies are possible but they depend on the specifics of your use case.

I hope this is helpful!