Feature Selection and KMeans Clustering

Hello,

I wanted to know whether there are ways to help with feature selection for an unsupervised learning model, apart from the feature impact computed after the model is run (PCA, perhaps?). My data has over 200 features, and DR only removes around 100 of them in its Informative Features list.

When I run my K-Means model, I get highly imbalanced clusters (99% and 1%). How can I improve this?

Hi Sanika!

Clustering is unsupervised learning, so there is no target to tell you which features should be removed. Some of our blueprints may indeed use PCA as a step in working out how to cluster your data, and you may see its effect in Feature Impact.

I would recommend increasing the number of clusters and checking how balanced the resulting clusters are.

The Informative Features list filters out features that probably have no effect on the result, and it does this before the project is created. Your data may contain a lot of missing values or noise; you could go to the Data tab, exclude the features that are too noisy, and create a new feature list there. Alternatively, create a feature list from the Feature Impact results on the Leaderboard.
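The check suggested here can be tried outside DataRobot as well. The following is only a minimal sketch using scikit-learn on synthetic data; the helper name `cluster_shares`, the data, and the range of cluster counts are assumptions made for illustration, not anything DataRobot runs internally.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler


def cluster_shares(X, k, seed=0):
    """Fit K-Means with k clusters and return each cluster's share of rows."""
    labels = KMeans(n_clusters=k, n_init=10, random_state=seed).fit_predict(X)
    counts = np.bincount(labels, minlength=k)
    return counts / counts.sum()


# Synthetic stand-in for the real data: one large group plus a few far-away rows.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(0.0, 1.0, size=(990, 5)),
    rng.normal(12.0, 1.0, size=(10, 5)),
])
X = StandardScaler().fit_transform(X)

# Print the cluster shares, largest first, for several cluster counts.
for k in range(2, 7):
    shares = cluster_shares(X, k)
    print(k, np.round(np.sort(shares)[::-1], 3))
```

If one tiny cluster persists at every value of `k`, it may simply be a handful of outliers that K-Means keeps isolating, which is worth inspecting before tuning anything else.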

Please let me know if you have more specific needs with your data.

I highly recommend visually inspecting the key variables' impact on the clusters produced by unsupervised learning. I also highly recommend transforming the raw data into a statistical form that makes orthogonality your best friend. Rather than using 200 raw variables, consider submitting them to PCA or Canonical Correlations and using the resulting dimension scores as input to the KMeans clustering. Then use iterative procedures to weed out the variables that do not lend themselves to interpretability.
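As a minimal sketch of that idea in scikit-learn: standardize the features, keep enough principal components to explain a chosen share of the variance, and cluster on the component scores. The synthetic data, the 95% variance cutoff, and the choice of three clusters are all assumptions for illustration only.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in: 200 correlated features driven by 5 latent factors.
rng = np.random.default_rng(42)
latent = rng.normal(size=(500, 5))
loadings = rng.normal(size=(5, 200))
X = latent @ loadings + 0.1 * rng.normal(size=(500, 200))

pipeline = make_pipeline(
    StandardScaler(),                            # put all features on one scale
    PCA(n_components=0.95, svd_solver="full"),   # keep 95% of the variance
    KMeans(n_clusters=3, n_init=10, random_state=0),
)
labels = pipeline.fit_predict(X)

pca = pipeline.named_steps["pca"]
print("components kept:", pca.n_components_)
print("cluster sizes:", np.bincount(labels))
```

Inspecting `pca.components_` afterwards shows which raw variables load on each dimension, which is one starting point for the iterative weeding-out step.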

I will be happy to go through this in detail with you, or anyone.

Hi, zsfeinstein!

Thanks for your suggestion. However, you don't really need to run PCA yourself, as DataRobot already does it for you, and much more.

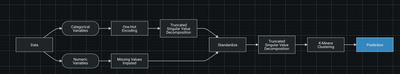

Take a look at this example of one of our clustering blueprints: it includes an SVD block, which is the most common algorithm for computing PCA, along with many data preprocessing steps that you would otherwise have to build yourself.
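The link between SVD and PCA can be checked numerically. The NumPy sketch below, on made-up data, shows that the SVD of a mean-centred matrix yields the same component variances as the eigenvalues of the covariance matrix. Note that the equivalence relies on centring; whether the SVD block in the blueprint centres its input is not stated in this thread.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 6)) @ rng.normal(size=(6, 6))  # correlated columns

Xc = X - X.mean(axis=0)  # PCA assumes mean-centred columns
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)

# Route 1: variances of the principal components from the singular values.
var_from_svd = s**2 / (Xc.shape[0] - 1)

# Route 2: eigenvalues of the sample covariance matrix, largest first.
eigvals = np.linalg.eigvalsh(np.cov(Xc, rowvar=False))[::-1]
print(np.allclose(var_from_svd, eigvals))

# Component scores: projecting onto the right singular vectors equals U * s.
scores = Xc @ Vt.T
print(np.allclose(scores, U * s))
```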

Hello,

I noticed it in the blueprint, thanks for clarifying that. I have a couple of other questions about the blueprint for K-Means clustering. Is there no documentation, or any other way, to find out which methods were used for the different steps? For instance, how were the missing values imputed, was a standard scaler used to standardize the data, and how exactly did truncated SVD contribute to the model?

Also, for validating cluster stability, I did not find the Prediction feature useful, as it gives me a .csv output with the probability of each entity belonging to each cluster. Is there any way to get the cluster split in % for the test data, as was shown for the training data?
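One possible workaround is to derive the split from the exported probabilities yourself: assign each row to its most probable cluster and count. The pandas sketch below uses an inline, made-up CSV; the column names (`row_id`, `Cluster 1`, and so on) are assumptions and would need to match the actual export.

```python
import io
import pandas as pd

# Hypothetical prediction export: one probability column per cluster.
csv_text = """row_id,Cluster 1,Cluster 2,Cluster 3
0,0.90,0.05,0.05
1,0.10,0.80,0.10
2,0.70,0.20,0.10
3,0.20,0.30,0.50
"""
preds = pd.read_csv(io.StringIO(csv_text), index_col="row_id")

assigned = preds.idxmax(axis=1)  # most probable cluster for each row
split_pct = assigned.value_counts(normalize=True).mul(100).round(1)
print(split_pct)
```

Comparing `split_pct` with the training split gives a rough view of whether the cluster proportions hold up on the test data.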

Another thing I wanted to know is how DataRobot handles highly correlated features in unsupervised learning (as they may lead to overfitting?). I can see that the Informative Features list built from my dataset still contains many highly correlated features (+1.00).
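Independently of how DataRobot treats them, near-duplicate columns can be pruned before upload. In K-Means, a feature that appears twice effectively counts twice in the Euclidean distance. The sketch below drops one column from each highly correlated pair; the helper name `drop_correlated`, the 0.95 threshold, and the data are hypothetical.

```python
import numpy as np
import pandas as pd


def drop_correlated(df, threshold=0.95):
    """Drop the later column of every pair whose |Pearson r| exceeds threshold."""
    corr = df.corr().abs()
    # Keep only the strict upper triangle so each pair is examined once.
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return df.drop(columns=to_drop), to_drop


rng = np.random.default_rng(1)
a = rng.normal(size=200)
df = pd.DataFrame({
    "a": a,
    "a_scaled": 2.0 * a + 1.0,  # perfectly correlated with "a"
    "b": rng.normal(size=200),
})
reduced, dropped = drop_correlated(df)
print(dropped)
```

Which column of a pair to keep is a judgment call; this sketch simply keeps whichever comes first in the table.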