Missing Value Imputation understanding

For some blueprints, numeric variables have ‘Missing Values Imputed’. The documentation says mean/median/random value is imputed, and an indicator variable is created (row-based binary missing value flag).

But we don't see these indicator features in the variable importance plot, nor in the full list after downloading it as a CSV.

Why are they not included there? How can we evaluate the importance of having these flag variables in our model, if they are not included there?

@max_roman, good question.

A few quick things on missing value imputation:

- Different blueprints handle this in different ways. Linear/coefficient-based blueprints use the median imputation you mention, while tree-based blueprints impute an arbitrary nonsense value far outside the data distribution.

- 'Missing indicator' columns/features are created but are not shown directly, because their impact/effect is grouped into the 'core' feature from which the 'missing indicator' column was created.

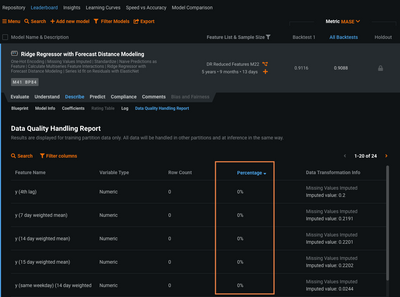

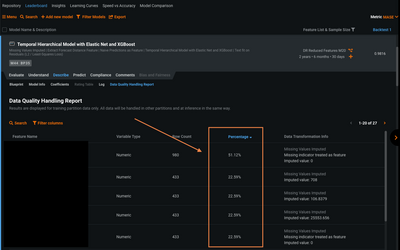

- You can see which features received missing-value treatment, along with further details, under 'Describe -> Data Quality Handling Report' for the blueprint on the Leaderboard.
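As a rough illustration of the two imputation styles in the list above, here is a minimal plain-Python sketch. It is not the platform's implementation: the function name `impute_with_indicator`, the `strategy` values, and the rule for picking the out-of-range fill value are all assumptions made for the example.

```python
from statistics import median


def impute_with_indicator(values, strategy="median"):
    """Fill missing entries (None) and return (imputed_values, missing_flags).

    Hypothetical helper for illustration only.
    strategy="median":   fill with the median of the observed values.
    strategy="sentinel": fill with a value far below the observed range
                         (assumed rule: min - 10 * range).
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("column has no observed values")

    if strategy == "median":
        fill = median(observed)
    elif strategy == "sentinel":
        spread = max(observed) - min(observed)
        fill = min(observed) - 10 * (spread or 1.0)
    else:
        raise ValueError(f"unknown strategy: {strategy}")

    imputed = [fill if v is None else v for v in values]
    flags = [1 if v is None else 0 for v in values]  # row-based missing flag
    return imputed, flags


ages = [34, None, 51, 29, None, 42]
print(impute_with_indicator(ages, "median"))
# -> ([34, 38.0, 51, 29, 38.0, 42], [0, 1, 0, 0, 1, 0])
print(impute_with_indicator(ages, "sentinel"))
# -> ([34, -191, 51, 29, -191, 42], [0, 1, 0, 0, 1, 0])
```

In both cases the flag column records which rows were originally missing, so a model can still pick up on "missingness" itself even after the gaps are filled.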

These reports may or may not be particularly informative. In the report shown above, there weren't any missing values. In the case below, we see quite the opposite: 20-50% of values are missing for many features.

Notice the feature indicated by the orange arrow. It has ~51% missing values and did end up being used in the model. But its impact is quite small, likely because so many of its values were missing that it lost otherwise-relevant signal.
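To make the idea of a single impact score covering both the imputed value and its missing flag more concrete, here is a generic permutation-style sketch. It assumes the raw column is shuffled before preprocessing, so the imputed value and the flag move together. This is an illustration, not a description of the platform's internals; `toy_model`, `permutation_impact`, and `flag_only_impact` are hypothetical names.

```python
import random
from statistics import median


def toy_model(value, is_missing):
    # Hypothetical fitted model that uses both the imputed value and the flag.
    return 2.0 * value + 5.0 * is_missing


def mse(values, flags, targets):
    errors = [(toy_model(v, f) - t) ** 2 for v, f, t in zip(values, flags, targets)]
    return sum(errors) / len(errors)


def preprocess(raw, fill):
    values = [fill if v is None else v for v in raw]
    flags = [1 if v is None else 0 for v in raw]
    return values, flags


def permutation_impact(raw, targets, fill, n_repeats=20, seed=0):
    """Shuffle the RAW column: imputed value and flag are permuted together."""
    rng = random.Random(seed)
    baseline = mse(*preprocess(raw, fill), targets)
    total = 0.0
    for _ in range(n_repeats):
        shuffled = raw[:]
        rng.shuffle(shuffled)
        total += mse(*preprocess(shuffled, fill), targets) - baseline
    return total / n_repeats


def flag_only_impact(raw, targets, fill, n_repeats=20, seed=0):
    """Shuffle only the derived flag column, leaving imputed values in place."""
    rng = random.Random(seed)
    values, flags = preprocess(raw, fill)
    baseline = mse(values, flags, targets)
    total = 0.0
    for _ in range(n_repeats):
        shuffled = flags[:]
        rng.shuffle(shuffled)
        total += mse(values, shuffled, targets) - baseline
    return total / n_repeats


raw = [1.0, None, 3.0, None, 5.0, 2.0, None, 4.0]
fill = median(v for v in raw if v is not None)
targets = [toy_model(v, f) for v, f in zip(*preprocess(raw, fill))]

print("combined impact: ", permutation_impact(raw, targets, fill))
print("flag-only impact:", flag_only_impact(raw, targets, fill))
```

The first score is the grouped view: one number for the core feature. The second isolates the flag, which is only possible when you control the preprocessing yourself, as in this toy setup.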

This model was trained with ~51% of this feature's values missing, so its measured importance reflects that context. If future data (or training data updated to fill in those missing values) had fewer missing values, the feature's impact on the model might differ, and its Feature Impact score could be larger. This highlights the importance of tracking data drift (training data vs. future prediction data): if newer data showed fewer missing values, for example, that would be a sign to retrain the model.
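A simple way to watch for this kind of change is to compare a feature's missing rate in the training data against newer data. The sketch below is only an illustration: the function names and the 10-percentage-point `tolerance` are assumptions, not platform defaults.

```python
def missing_rate(column):
    """Fraction of entries that are missing (None)."""
    if not column:
        raise ValueError("column is empty")
    return sum(v is None for v in column) / len(column)


def missing_rate_shift(train_col, new_col, tolerance=0.10):
    """Return (train_rate, new_rate, shifted).

    `shifted` is True when the missing rates differ by more than `tolerance`
    (an arbitrary threshold chosen for this example).
    """
    train_rate = missing_rate(train_col)
    new_rate = missing_rate(new_col)
    return train_rate, new_rate, abs(new_rate - train_rate) > tolerance


# Training data: half the values missing. Newer data: far fewer.
train = [None, 3.2, None, 1.1, None, 4.8, None, 2.0, None, 0.7]
recent = [2.9, 3.1, 1.4, None, 4.0, 2.2, 3.3, 0.9, 1.8, 2.5]

train_rate, new_rate, shifted = missing_rate_shift(train, recent)
print(train_rate, new_rate, shifted)  # -> 0.5 0.1 True
```

A flagged shift like this would be one prompt to consider retraining, since the feature's impact was estimated under a very different missing rate.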

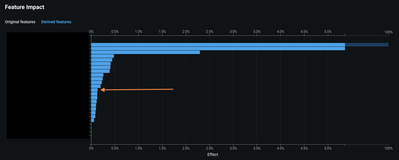

(Forgot to include this last figure)

Here we see the feature impact of that feature with ~51% missing values. It is included, but not very important (perhaps in part because so many values were missing):