Python API Scoring Data in AI Catalog

I am trying to use the Python API to generate predictions on a project and pull those predictions into Python. The project has three secondary datasets, all located in the AI Catalog. When the project was created, the secondary datasets went through automated feature engineering. How do I send the new datasets to the desired model in the project to generate predictions using the Python API?

Here is the answer from my colleague:

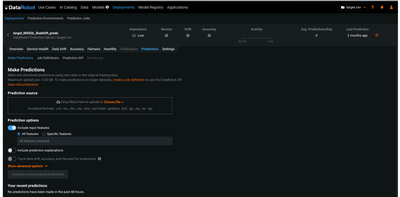

Once your model is deployed, you can make predictions from the Predictions tab; you only need to upload the scoring dataset, either from the AI Catalog or from a local file.

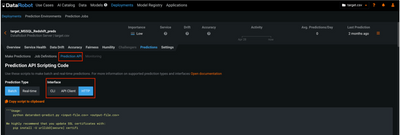

Another way to make predictions is to use the CLI, the API client, or HTTP; all of these options can be found on the Prediction API tab.

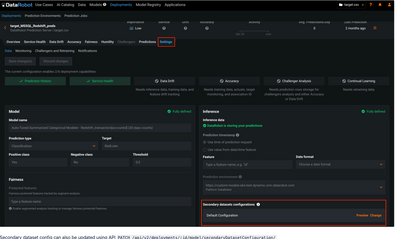

The secondary datasets configuration can be changed on the Settings tab.

I need to be able to kick off the scoring and retrieve the predictions from Python without deploying the individual model. Is there a way to do this without moving the model into deployment?

This should be doable by passing the secondary_datasets_config_id argument to the project's upload_dataset method and then requesting predictions on the resulting dataset object.
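As a rough sketch of that flow (not an official recipe), the helper below uploads a scoring file with a secondary dataset configuration and requests predictions from a model that has not been deployed. The function name `score_with_secondary_datasets` and its parameters are made up for illustration; it assumes `project` is a `dr.Project` and `model` is a `dr.Model` from the `datarobot` Python client, and that your client version supports `secondary_datasets_config_id` on `upload_dataset`.

```python
def score_with_secondary_datasets(project, model, scoring_data,
                                  secondary_datasets_config_id, max_wait=600):
    """Upload scoring data to a project and return predictions from one model.

    Assumed inputs (hypothetical helper, not part of the datarobot package):
      project  - a datarobot Project object
      model    - a datarobot Model object belonging to that project
      scoring_data - path to a local file or a pandas DataFrame
      secondary_datasets_config_id - ID of the secondary dataset configuration
    """
    # Upload the primary scoring data and tell the project which secondary
    # dataset configuration to use when generating the engineered features.
    prediction_dataset = project.upload_dataset(
        scoring_data,
        secondary_datasets_config_id=secondary_datasets_config_id,
    )

    # Queue a prediction job directly on the model (no deployment involved).
    predict_job = model.request_predictions(dataset_id=prediction_dataset.id)

    # Wait for the job to finish and return its result
    # (a pandas DataFrame when using the real client).
    return predict_job.get_result_when_complete(max_wait=max_wait)
```

With a configured client, this would be called along the lines of `score_with_secondary_datasets(dr.Project.get(project_id), dr.Model.get(project_id, model_id), "scoring_data.csv", config_id)`, where the IDs and the file name are placeholders for your own values.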

Best,

Taylor

Okay, so where do I find the secondary_datasets_config_id in the platform for the project I'm working on?

Apologies for the delay!

You can list them for your project by using dr.SecondaryDatasetConfigurations.list(<insert project ID>). Documentation for this is here.
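A minimal sketch of that lookup, assuming the `datarobot` client is installed and already configured with your endpoint and token. The helper name `list_secondary_dataset_config_ids` and the `config_api` parameter are made up for illustration, and reading a `name` attribute from each configuration is an assumption (it falls back to `None` if absent).

```python
def list_secondary_dataset_config_ids(project_id, config_api=None):
    """Return (id, name) pairs for a project's secondary dataset configurations.

    `config_api` is a hypothetical hook for supplying a stand-in object;
    by default the real dr.SecondaryDatasetConfigurations class is used.
    """
    if config_api is None:
        # Imported lazily so the helper can be defined without the client installed.
        import datarobot as dr
        config_api = dr.SecondaryDatasetConfigurations

    # Ask the platform for every configuration attached to the project.
    configs = config_api.list(project_id)

    # Keep just the ID (the value to pass as secondary_datasets_config_id)
    # and, if present, a human-readable name to tell configurations apart.
    return [(config.id, getattr(config, "name", None)) for config in configs]
```

Each returned ID is a candidate value for the secondary_datasets_config_id argument mentioned earlier in the thread.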

Best,

Taylor