
Why are Validation, Cross-Validation, and Holdout NA?


Question:

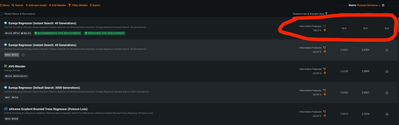

Here's another one I get a lot from customers. In the leaderboard, the top performing model blueprint and recommended by DataRobot, is NA on the evaluation metric across Validation, Cross-Validation and Holdout fold. Why is this happening? Here's an example screen shot:

Answer:

DataRobot has refit this blueprint on 100% of the data. Because the model has already seen every row, there is no out-of-sample data left to measure its accuracy on (you can't validate on a sample the model was trained on). That's why the metric shows NA across all three partitions.
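A minimal sketch of why the score turns into NA, assuming a generic setup rather than DataRobot's internals: a metric can only be computed on rows the model did not train on, so once every row is in the training set there is nothing left to score. The function `out_of_sample_rmse`, the row IDs, and the values are hypothetical.

```python
import math
from typing import Dict, Optional, Set


def out_of_sample_rmse(
    actuals: Dict[int, float],
    predictions: Dict[int, float],
    training_rows: Set[int],
) -> Optional[float]:
    """Return RMSE over rows the model did NOT train on, or None (shown as NA)."""
    # Only rows outside the training set are valid for evaluation.
    eval_rows = [row for row in actuals if row not in training_rows]
    if not eval_rows:
        # Model was trained on 100% of the rows: nothing left to validate on.
        return None
    squared_errors = [(actuals[r] - predictions[r]) ** 2 for r in eval_rows]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


actuals = {0: 1.0, 1: 2.0, 2: 3.0, 3: 4.0, 4: 5.0}
predictions = {0: 1.1, 1: 1.9, 2: 3.2, 3: 3.8, 4: 4.5}

# Trained on rows 0-3: row 4 is held out, so a score exists.
print(out_of_sample_rmse(actuals, predictions, {0, 1, 2, 3}))  # 0.5
# Trained on every row: the score is undefined (NA).
print(out_of_sample_rmse(actuals, predictions, {0, 1, 2, 3, 4}))  # None
```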

We do know that this model is based on the winning blueprint (BP33 in the screenshot above), which was trained on ~64% of the data. The small snowflake icon marks a frozen run: the model's tuning parameters are frozen from the same blueprint at the lower sample size. In other words, we don't re-tune the model's parameters; we only refit the model on more data.
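Where the ~64% likely comes from, assuming DataRobot's default partitioning (a 20% holdout, then five-fold cross-validation on the remaining 80%); your project's partition settings may differ. The helper `training_fraction` is hypothetical.

```python
from fractions import Fraction


def training_fraction(holdout_pct: int, n_folds: int) -> Fraction:
    """Share of all rows used to train one cross-validation fold model."""
    holdout = Fraction(holdout_pct, 100)
    # Rows left after the holdout is set aside.
    remaining = 1 - holdout
    # One of the n_folds folds is used for validation; the rest are for training.
    return remaining * Fraction(n_folds - 1, n_folds)


frac = training_fraction(holdout_pct=20, n_folds=5)
print(frac, float(frac))  # 16/25 0.64
```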

But why do we do this?

We do this because refitting the model on the full dataset usually improves accuracy slightly, since the model learns from more data. However, you don't want to re-tune the parameters at that point: with no held-out data left to check against, re-tuning could overfit. To properly test the accuracy of the model refit on 100% of the data, bring an external test set into DataRobot and use it to verify the accuracy results.
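The same workflow can be illustrated outside DataRobot with a toy k-nearest-neighbours regressor in plain Python, where `k` stands in for a tuned parameter. This is a hypothetical sketch of the general technique, not DataRobot's implementation: tune `k` on a partition, freeze it, refit on 100% of the data, then score only on an external test set the model has never seen. All data, names, and candidate values of `k` are made up for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (feature, target)


def knn_predict(train: List[Point], x: float, k: int) -> float:
    """Average the targets of the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(t for _, t in nearest) / len(nearest)


def mae(train: List[Point], test: List[Point], k: int) -> float:
    """Mean absolute error of a k-NN model fit on `train`, scored on `test`."""
    return sum(abs(knn_predict(train, x, k) - y) for x, y in test) / len(test)


# Hypothetical project data plus a separate external test set.
data = [(float(x), 2.0 * x + (1.0 if x % 3 == 0 else -1.0)) for x in range(20)]
external_test = [(x + 0.5, 2.0 * (x + 0.5)) for x in range(0, 20, 4)]

# 1. Tune k on a partition: train on 80% of the rows, validate on the other 20%.
train_part = [p for i, p in enumerate(data) if i % 5 != 0]
valid_part = [p for i, p in enumerate(data) if i % 5 == 0]
best_k = min([1, 3, 5, 7], key=lambda k: mae(train_part, valid_part, k))

# 2. Freeze best_k and refit on 100% of the data (no re-tuning).
#    For k-NN, "fitting" just means using all rows as neighbours.
#    A validation score is now meaningless: every row was used for training.
full_model_data = data

# 3. The only honest accuracy check left is the external test set.
print("frozen k:", best_k)
print("external test MAE:", round(mae(full_model_data, external_test, best_k), 3))
```

Keeping `best_k` fixed in step 2 mirrors the frozen-run idea: the extra data changes what the model learns, but the tuning choice made on held-out data is not revisited.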


Love this @Eu Jin. Succinct and great info!
