Visual AI model tuning: A quick guide

Intro

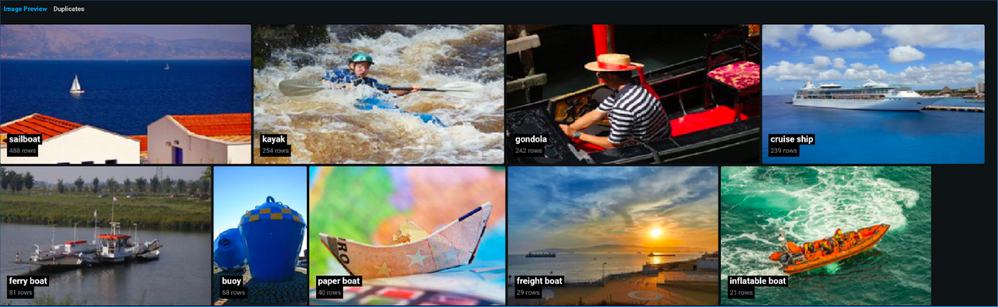

For this guide, we are going to step through several recommended methods for maximizing Visual AI classification accuracy with a boat dataset containing 9 classes and approximately 1,500 images.

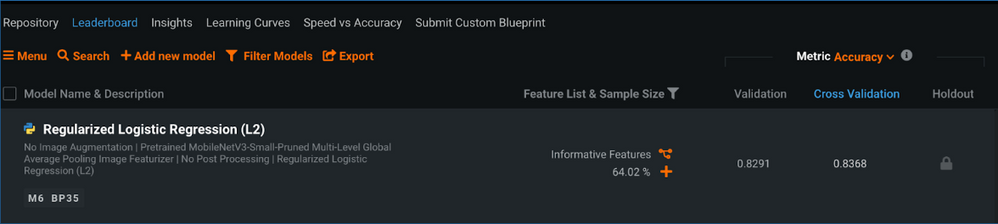

The default training process consists of uploading a dataset, selecting the target to predict, and hitting Start.

This produces a top model with 83.68% cross-validation accuracy. Note: LogLoss is the optimization metric; accuracy is reported here because it is easier to interpret.
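To see why the two metrics can disagree, here is a minimal plain-Python sketch that computes multiclass LogLoss and accuracy for two hypothetical three-class models. The function names and probabilities are illustrative only; DataRobot computes these metrics internally.

```python
import math

def multiclass_logloss(y_true, probs, eps=1e-15):
    """Mean negative log-probability assigned to the true class.

    y_true: list of integer class indices.
    probs:  list of per-row probability lists (each row sums to 1).
    """
    total = 0.0
    for label, row in zip(y_true, probs):
        # Clip so an overconfident wrong prediction cannot produce log(0).
        p = min(max(row[label], eps), 1 - eps)
        total -= math.log(p)
    return total / len(y_true)

def accuracy(y_true, probs):
    """Fraction of rows whose highest-probability class is the true class."""
    correct = sum(
        1 for label, row in zip(y_true, probs)
        if max(range(len(row)), key=row.__getitem__) == label
    )
    return correct / len(y_true)

# Two hypothetical models that rank the classes identically but differ in confidence.
y = [0, 1, 2]
confident = [[0.9, 0.05, 0.05], [0.05, 0.9, 0.05], [0.05, 0.05, 0.9]]
hesitant = [[0.4, 0.3, 0.3], [0.3, 0.4, 0.3], [0.3, 0.3, 0.4]]

print(accuracy(y, confident), accuracy(y, hesitant))  # 1.0 1.0
print(multiclass_logloss(y, confident) < multiclass_logloss(y, hesitant))  # True
```

Accuracy only checks whether the top-ranked class is correct, while LogLoss also rewards well-calibrated confidence. That makes LogLoss the more informative optimization target, even though accuracy is easier to read.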

There are several steps we can take to improve these results.

1. Run with comprehensive mode

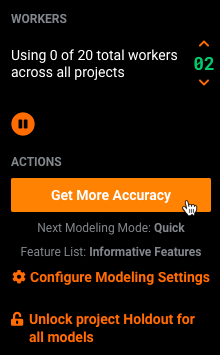

Our first modeling run generated results quickly by exploring a limited set of the available blueprints; many more are explored in Comprehensive mode. Comprehensive modeling mode can be set during project setup or, after initial modeling is complete, via the WORKERS section on the Leaderboard. Select Get More Accuracy to re-run the modeling process with new settings; to configure those settings before re-running, select Configure Modeling Settings.

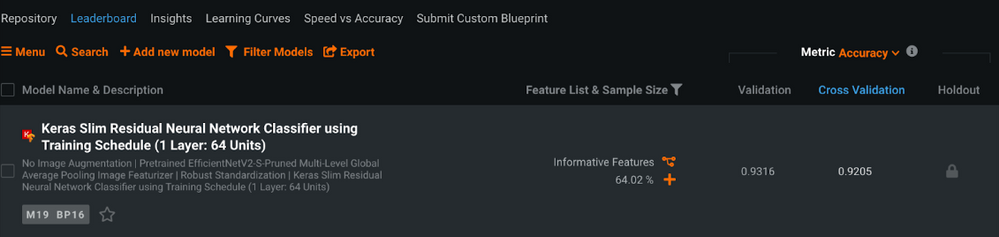

This resulted in a model with a much higher accuracy of 92.05%.

2. Explore other image featurizers

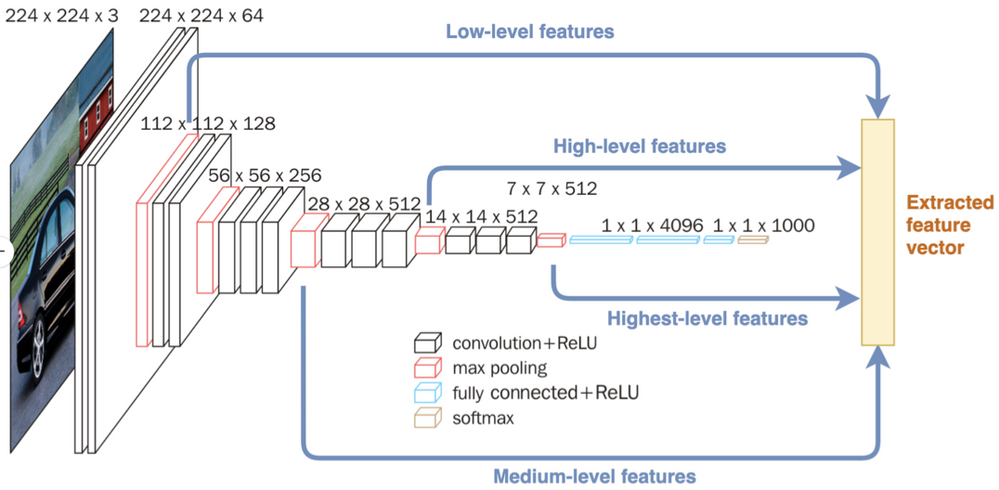

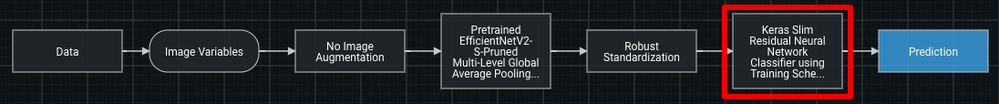

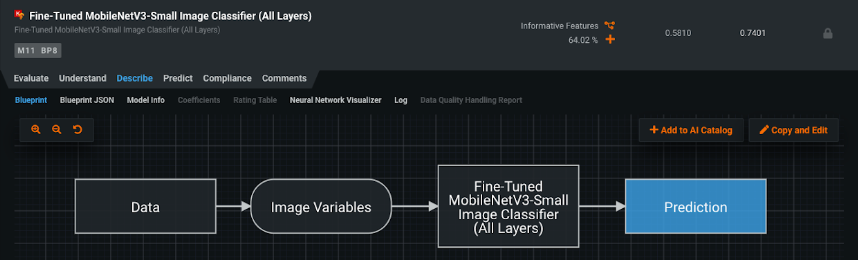

Once images are turned into numbers (“featurized”) by a task in the model's blueprint, they can be passed to a modeling algorithm and combined with other features (numeric, categorical, text, etc.). The featurizer takes the binary content of an image file as input and produces a feature vector that represents key characteristics of that image at different levels of complexity. These feature vectors are then used downstream as input to a modeler. DataRobot provides several featurizers based on pretrained neural network architectures.
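The data flow described above (image in, fixed-length feature vector out, then concatenation with other features before the modeler) can be sketched as follows. `toy_featurizer` is a hypothetical stand-in that computes simple pixel statistics from an already-decoded grayscale image; DataRobot's real featurizers are pretrained neural networks, and none of these names come from its API.

```python
from statistics import mean, pstdev

def toy_featurizer(pixels):
    """Hypothetical stand-in for a pretrained CNN featurizer.

    pixels: 2D list of grayscale values in [0, 255] (already decoded).
    Returns a fixed-length feature vector, whatever the image size.
    """
    flat = [v for row in pixels for v in row]
    return [mean(flat) / 255, pstdev(flat) / 255, max(flat) / 255, min(flat) / 255]

def build_row(pixels, numeric, categorical, categories):
    """Concatenate image features with numeric and one-hot categorical features."""
    image_features = toy_featurizer(pixels)
    one_hot = [1.0 if categorical == c else 0.0 for c in categories]
    return image_features + list(numeric) + one_hot

image = [[0, 255], [255, 0]]
row = build_row(image, numeric=[12.5], categorical="sail", categories=["motor", "sail"])
print(row)  # [0.5, 0.5, 1.0, 0.0, 12.5, 0.0, 1.0]
```

The key property is that the featurizer always emits the same number of values, so its output can sit alongside ordinary tabular columns in a single row for the downstream modeler.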

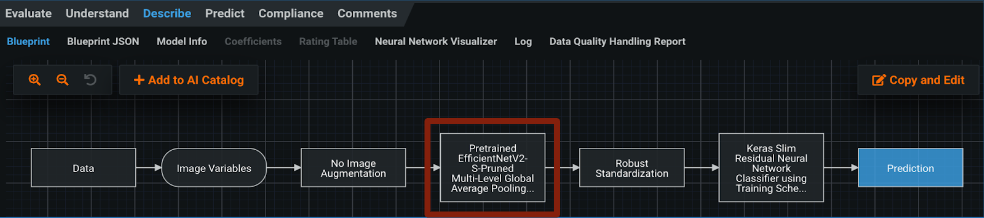

To explore improvements with other image featurizers, select the top model on the Leaderboard. The model blueprint shows which featurizer was used.

Select Evaluate -> Advanced Tuning and scroll to the network hyperparameter.

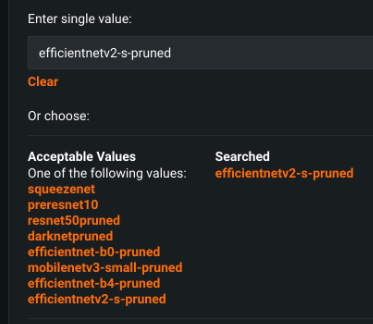

Selecting the currently used network brings up the menu of options.

When a hyperparameter has been updated, scroll to the bottom and select Begin Tuning.

After tuning the top model from Comprehensive mode with each available image featurizer, we can further explore variations of the featurizers used in the top-performing models.
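The sweep described above (retune the top model once per available featurizer, then keep the best few for further variation) amounts to a simple ranking loop. This is a hypothetical sketch: `score_fn` stands in for the cross-validated LogLoss of an Advanced Tuning run, and the featurizer names and scores below are placeholders, not DataRobot's actual list or real results.

```python
from typing import Callable, Iterable

def shortlist_featurizers(
    featurizers: Iterable[str],
    score_fn: Callable[[str], float],
    keep: int = 2,
) -> list[tuple[str, float]]:
    """Score each featurizer once and return the `keep` best, lowest LogLoss first."""
    scored = [(name, score_fn(name)) for name in featurizers]
    scored.sort(key=lambda pair: pair[1])  # LogLoss: lower is better
    return scored[:keep]

# Placeholder names and dummy scores; in practice each score would come
# from an Advanced Tuning run of the top model with that featurizer.
dummy_logloss = {"featurizer_a": 0.31, "featurizer_b": 0.27, "featurizer_c": 0.35}
print(shortlist_featurizers(dummy_logloss, dummy_logloss.__getitem__))
# [('featurizer_b', 0.27), ('featurizer_a', 0.31)]
```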

3. Feature Granularity

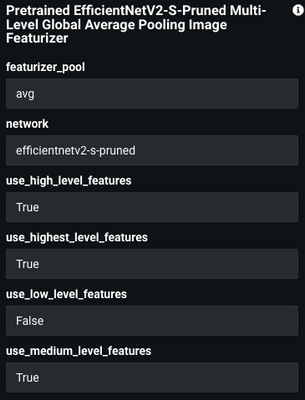

Featurizers are deep convolutional neural networks made up of sequential layers, each aggregating information from the previous layers. The first layers capture low-level patterns made of a few pixels: points, edges, corners. The next layers capture shapes and textures, and the final layers capture objects. You can select which levels of features to extract from the network and tune them to optimize results, though the more levels you enable, the longer the run time.

Toggles for feature granularity options (highest, high, medium, low) are found below the network section in the Advanced Tuning menu.

Any combination of these can be used, and the context of your problem and data can indicate which features are likely to be most useful.
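Because the four granularity toggles can be combined freely, there are 2^4 - 1 = 15 non-empty settings to choose from. The minimal sketch below enumerates them, smallest first. Ordering by the number of enabled levels is our simplifying assumption, used as a rough proxy for run time based on the note above.

```python
from itertools import combinations

LEVELS = ["highest", "high", "medium", "low"]

def granularity_settings(levels=LEVELS):
    """Yield every non-empty combination of granularity toggles, fewest levels first."""
    for size in range(1, len(levels) + 1):
        for combo in combinations(levels, size):
            yield combo

settings = list(granularity_settings())
print(len(settings))  # 15
print(settings[0], settings[-1])  # ('highest',) ('highest', 'high', 'medium', 'low')
```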

4. Image Augmentation

Following featurizer tuning, we can explore changes to the input data with image augmentation to improve model accuracy. By creating new training images through random transformations of existing ones, you can build insightful projects with datasets that might otherwise be too small.

Image augmentation is available at project setup in Show advanced options -> Image Augmentation or after modeling in Advanced Tuning.

Domain expertise here may also provide insight into which transformations could show the greatest impact. Otherwise, a good place to start is with rotation, then rotation + cutout, followed by other combinations.
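To make the two suggested starting transformations concrete, here is a minimal pure-Python sketch of rotation and cutout on a small grayscale image stored as a list of rows. It is restricted to 90-degree rotations and a fixed fill value for simplicity; DataRobot's own augmentation options are configured in the UI and are not reproduced here, and the function names are hypothetical.

```python
import random

def rotate90(img, k=1):
    """Rotate a 2D list clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        # Reverse the rows, then transpose: one clockwise quarter turn.
        img = [list(row) for row in zip(*img[::-1])]
    return img

def cutout(img, size, rng, fill=0):
    """Blank out a randomly placed size x size square (the 'cutout' augmentation)."""
    h, w = len(img), len(img[0])
    top = rng.randrange(0, h - size + 1)
    left = rng.randrange(0, w - size + 1)
    out = [row[:] for row in img]  # copy so the source image is untouched
    for r in range(top, top + size):
        for c in range(left, left + size):
            out[r][c] = fill
    return out

def augment(img, rng):
    """Create one new training image: a random rotation followed by cutout."""
    rotated = rotate90(img, k=rng.randrange(4))
    return cutout(rotated, size=1, rng=rng)

rng = random.Random(0)  # seeded for reproducibility
image = [[1, 2], [3, 4]]
print(rotate90(image))  # [[3, 1], [4, 2]]
extra = [augment(image, rng) for _ in range(3)]  # three new training variants
```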

5. Classifier Hyperparameters

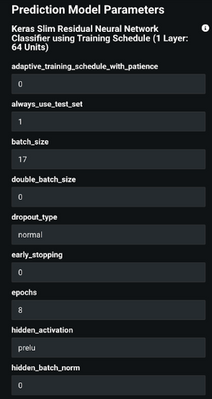

The training hyperparameters of the classifier, the component receiving the image feature encodings from the featurizer, are also exposed for tuning within the Advanced Tuning menu.

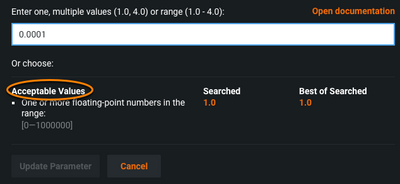

To set a new hyperparameter, enter a new value in the Enter value field in one of the following ways:

- Select one of the pre-populated values (clicking any value listed in orange enters it into the value field).

- Type a value into the field. Refer to the Acceptable Values field, which lists either constraints for numeric inputs or predefined allowed values for categorical inputs (“selects”). To enter a specific numeric value, type a value or range that meets the Acceptable Values criteria:

In the screenshot above, you can enter values between 0.00001 and 1, for example:

- 0.2 to select an individual value.

- 0.2, 0.4, 0.6 to list several individual values within the range; separate them with commas.

- 0.2-0.6 to specify a range and let DataRobot select intervals between the low and high values; use hyphen notation for a range.
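The three input forms above can be illustrated with a small parser. This is a hypothetical sketch of the syntax, not DataRobot's implementation. Because this guide does not describe how DataRobot chooses intervals inside a hyphenated range, the sketch simply assumes a fixed number of evenly spaced points. It also accepts only plain decimals, since scientific notation such as 1e-5 would clash with the hyphen.

```python
import re

LOW, HIGH = 0.00001, 1.0  # Acceptable Values from the example above
RANGE = re.compile(r"^\s*(\d*\.?\d+)\s*-\s*(\d*\.?\d+)\s*$")

def parse_values(text, points=5):
    """Expand '0.2', '0.2, 0.4, 0.6', or '0.2-0.6' into a list of floats.

    Assumes plain decimal numbers and, for ranges, `points` evenly spaced
    values including both endpoints; both are simplifications for illustration.
    """
    match = RANGE.match(text)
    if match:
        lo, hi = float(match.group(1)), float(match.group(2))
        if lo > hi:
            raise ValueError(f"range start {lo} exceeds end {hi}")
        if points < 2:
            raise ValueError("a range needs at least 2 points")
        step = (hi - lo) / (points - 1)
        # Append `hi` exactly so rounding cannot push the last point past it.
        values = [lo + i * step for i in range(points - 1)] + [hi]
    else:
        values = [float(part) for part in text.split(",")]
    for v in values:
        if not LOW <= v <= HIGH:
            raise ValueError(f"{v} is outside [{LOW}, {HIGH}]")
    return values

print(parse_values("0.2"))            # [0.2]
print(parse_values("0.2, 0.4, 0.6"))  # [0.2, 0.4, 0.6]
print(len(parse_values("0.2-0.6")))   # 5
```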

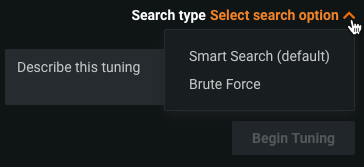

The search type can be set by clicking Select search option and choosing either:

- Smart Search (default): performs a sophisticated pattern search (optimization) that emphasizes areas where the model is likely to do well and skips hyperparameter points that are less relevant to the model.

- Brute Force: evaluates every combination of the specified values, which can be more time- and resource-intensive.
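Brute Force is easiest to picture as an exhaustive grid: every combination of the listed values gets a model, so the cost is the product of the list lengths. The minimal sketch below uses `evaluate` as a hypothetical placeholder for training and scoring one candidate, and the hyperparameter names are illustrative. Smart Search's pattern search is DataRobot's own and is not reproduced here.

```python
from itertools import product
from math import prod

def brute_force(grid, evaluate):
    """Evaluate every point of a hyperparameter grid; return (best_params, best_score).

    grid: dict mapping hyperparameter name -> list of candidate values.
    evaluate: callable taking a params dict and returning a loss (lower is better).
    """
    names = list(grid)
    best_params, best_score = None, float("inf")
    for values in product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

grid = {"learning_rate": [0.01, 0.1, 0.3], "batch_size": [16, 32, 64, 128]}
print(prod(len(v) for v in grid.values()))  # 12 models to train

# Toy loss for illustration only; a real run would train and cross-validate a model.
def toy_loss(params):
    return abs(params["learning_rate"] - 0.1) + params["batch_size"] / 1000

print(brute_force(grid, toy_loss))  # ({'learning_rate': 0.1, 'batch_size': 16}, 0.016)
```

Adding a third hyperparameter with five values would raise the count from 12 to 60 models, which is why Smart Search is the default.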

The hyperparameters recommended for searching first vary by classifier:

- Keras model: batch size, learning rate, hidden layers, and initialization

- XGBoost: number of variables, learning rate, number of trees and subsample per tree

- ENet: alpha and lambda
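For ENet, alpha and lambda control different things. Assuming the common glmnet-style parameterization (an assumption on our part; check the parameter descriptions in Advanced Tuning for the exact form DataRobot uses), the penalized objective over the model weights $w$ is:

$$
\min_{w}\; L(w) + \lambda \left( \alpha \lVert w \rVert_1 + \frac{1 - \alpha}{2} \lVert w \rVert_2^2 \right)
$$

Here $L(w)$ is the training loss, $\lambda \ge 0$ sets the overall strength of regularization, and $\alpha \in [0, 1]$ mixes the lasso ($\alpha = 1$) and ridge ($\alpha = 0$) penalties. Searching $\lambda$ therefore tunes how much the model is regularized, while searching $\alpha$ tunes what kind of regularization it gets.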

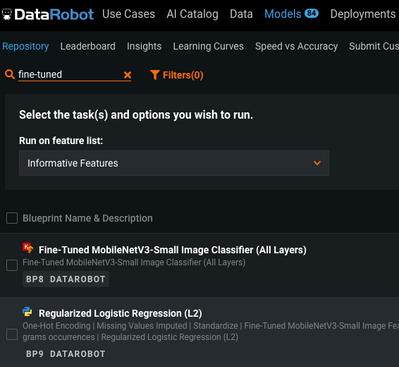

Bonus: Fine-Tuned Blueprints

Fine-tuned model blueprints may prove useful for datasets that differ greatly from ImageNet, the dataset used to pretrain the image featurizers. These blueprints are found in the model repository and can be trained from scratch (random weight initialization) or starting from the pretrained weights. Many of the tuning steps described above also apply to these blueprints; note, however, that fine-tuned blueprints require considerably longer training times.

In most cases, the pretrained blueprints achieve better scores, as they did with our boat dataset.

Final Results

Starting from a top model in Quick modeling mode with 83.68% accuracy, we then ran Comprehensive mode and produced a model with 92.05% accuracy. By following the additional steps outlined here, using the most effective tuning knobs in the platform, we squeezed out a bit more performance and reached a final accuracy of 92.92%.
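Because the gains shrink at each stage, it can help to view them as reductions in error rate (100% minus accuracy) rather than as raw accuracy points. The short calculation below uses only the three accuracies reported in this guide. The helper name is our own.

```python
def error_reduction(before_acc, after_acc):
    """Relative reduction in error rate, given accuracies in percent."""
    before_err, after_err = 100 - before_acc, 100 - after_acc
    return (before_err - after_err) / before_err

quick, comprehensive, final = 83.68, 92.05, 92.92
print(f"{error_reduction(quick, comprehensive):.1%}")  # 51.3%
print(f"{error_reduction(comprehensive, final):.1%}")  # 10.9%
print(f"{error_reduction(quick, final):.1%}")          # 56.6%
```

In other words, Comprehensive mode removed roughly half of the Quick-mode errors, and the subsequent tuning removed about a further tenth of the errors that remained.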