Mastering many tables in production ML: complete workflow

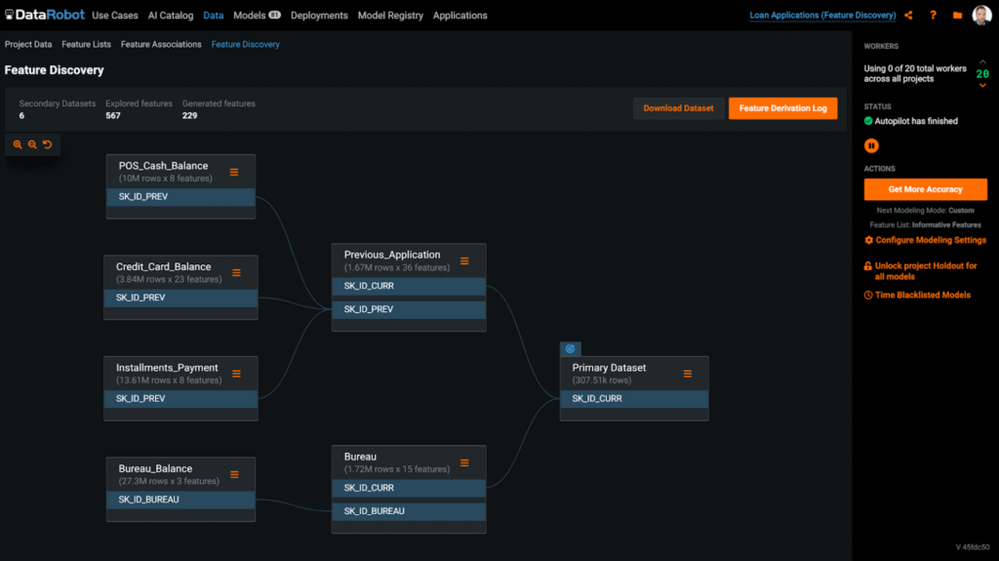

We've all been there: customer transaction data is in one table, but the customer membership history is in another. Or you have sub-second sensor data in one table, machine errors in another, and production demand in yet another, all at different time frequencies. Electronic Medical Records (EMRs) are another common instance of this challenge. You have a business use case you want to explore, so you build a v0 dataset from simple aggregations you have used before, perhaps pulled from a feature store. But moving past v0 is hard.

The reality is that the hypothesis space of relevant features explodes when you consider multiple data sources, each containing multiple data types. Dynamically exploring the feature space across tables minimizes the risk of missing signal through feature omission, and it reduces the burden of knowing every possibly relevant feature a priori.

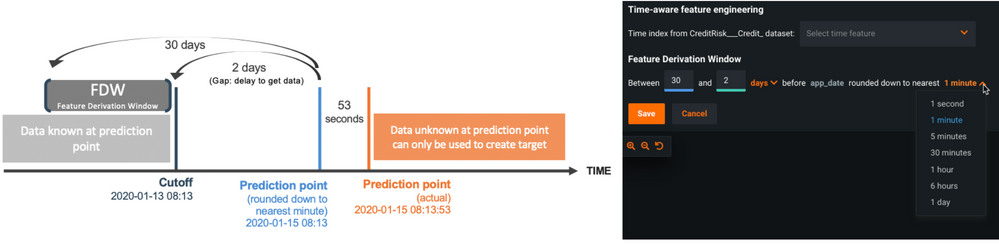

Event-based data is present in every vertical and is increasingly common. Building the right features can drastically improve model performance. However, working out which joins and time horizons best suit your data is challenging, and exploring them by hand is time-consuming and error-prone.

In this accelerator, you'll find a repeatable framework for building a production pipeline from multiple tables. The code uses Snowflake as a data source, but it can be extended to any supported database. Specifically, the accelerator provides a template to:

- Build time-aware features across multiple historical time windows and datasets using DataRobot and multiple tables in Snowflake (or any database).

- Build and evaluate multiple feature engineering approaches and algorithms for all data types.

- Extract insights and identify the best feature engineering and modeling pipeline.

- Test predictions locally.

- Deploy the best-performing model and all data preprocessing/feature engineering in a Docker container, and expose a REST API.

- Score from Snowflake and write predictions back to Snowflake.

- https://docs.datarobot.com/en/docs/api/api-quickstart/index.html
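
To make the first item in the list above more concrete, here is a minimal sketch of what "time-aware features across multiple historical time windows" means when written by hand in plain pandas. It is not the accelerator's code and does not use the DataRobot API. The function `window_features`, the table and column names, and the sample data are all hypothetical and chosen only for illustration. Each primary row has a prediction point, and only events strictly before that point and inside the lookback window are aggregated, so no future information leaks into the features.

```python
import pandas as pd


def window_features(primary, events, key, cutoff_col, event_time_col, value_col, windows):
    """Hypothetical helper: per primary row, sum and count events in each lookback window."""
    primary = primary.reset_index(drop=True)
    primary["_row"] = primary.index
    # Left join keeps primary rows that have no events at all.
    joined = primary[["_row", key, cutoff_col]].merge(events, on=key, how="left")
    age = joined[cutoff_col] - joined[event_time_col]
    out = primary.drop(columns="_row")
    for w in windows:
        # Keep only events strictly before the prediction point and within the window.
        mask = (age > pd.Timedelta(0)) & (age <= pd.Timedelta(w))
        values = joined[value_col].where(mask, 0.0)
        out[f"{value_col}_sum_{w}"] = values.groupby(joined["_row"]).sum().to_numpy()
        out[f"{value_col}_count_{w}"] = mask.groupby(joined["_row"]).sum().to_numpy()
    return out


# Hypothetical sample data: one prediction point per customer, plus a transaction log.
primary = pd.DataFrame({
    "customer_id": [1, 2],
    "prediction_point": pd.to_datetime(["2024-03-01", "2024-03-01"]),
})
events = pd.DataFrame({
    "customer_id": [1, 1, 1, 2],
    "ts": pd.to_datetime(["2024-02-27", "2024-02-10", "2024-03-02", "2023-12-01"]),
    "amount": [10.0, 20.0, 99.0, 5.0],
})

features = window_features(
    primary, events,
    key="customer_id", cutoff_col="prediction_point",
    event_time_col="ts", value_col="amount",
    windows=["7D", "30D"],
)
print(features)
```

Even this toy version yields two features per window for a single numeric column. Multiplying that by more tables, more columns, more aggregation types, and more windows is the feature-space explosion described earlier, and it is why automating the exploration is attractive.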