Tips for creating uncorrelated feature lists

Let’s assume that you want to create an uncorrelated feature list from which you can build final models, for example, by running DataRobot’s Autopilot modeling process. Essentially, the goal is to reduce the feature set to features that are as uncorrelated with one another as possible, while limiting any degradation in the performance metrics.

You can easily do that in DataRobot by following these steps:

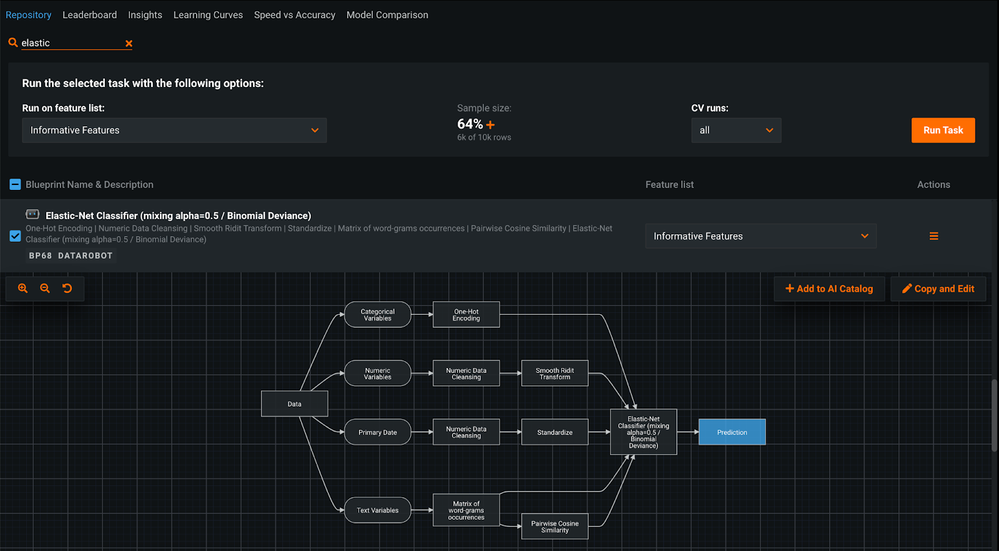

Step 1. Find an elastic net model in the Repository, either before or after kicking off the Autopilot modeling process for the first time.

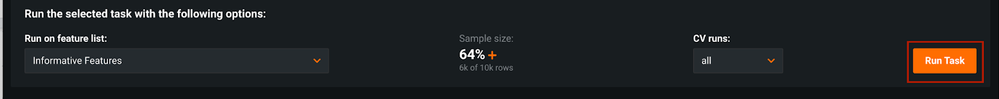

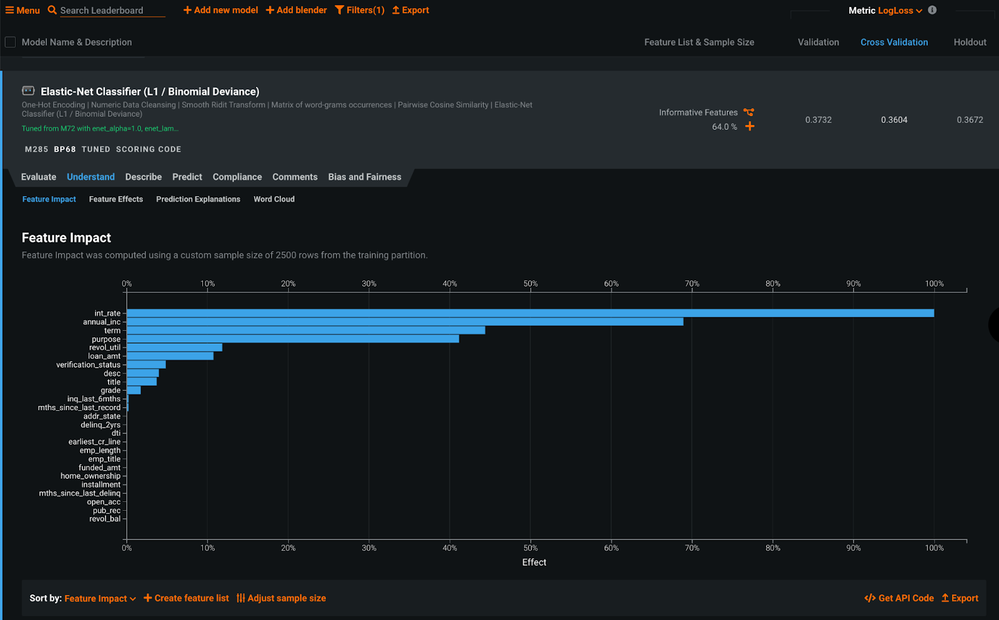

Step 2. Run this elastic net model on the Informative Features feature list (which contains all the features), with a 64% sample size and all CV runs.

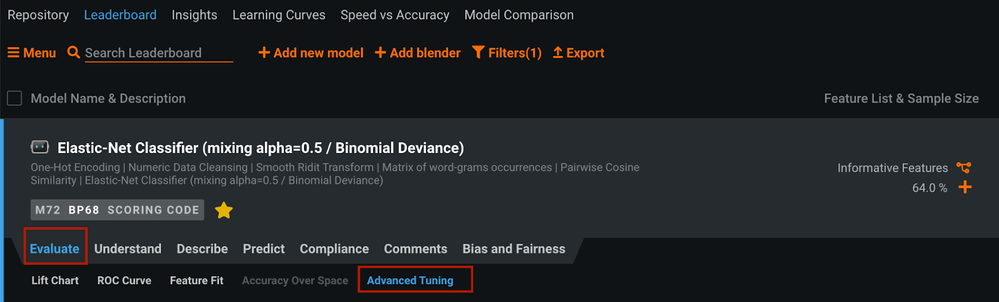

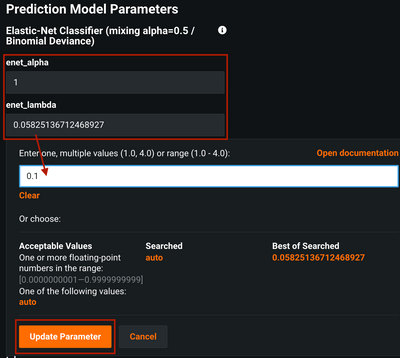

Step 3. Apply Advanced Tuning to the elastic net model so that the alpha parameter is equal to 1, which corresponds to the lasso penalty. Tune the lambda parameter as well and set it to a higher value; e.g., if the current parameter value is lambda=0.01, try lambda=0.1.
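To see why alpha=1 together with a larger lambda prunes correlated features, here is a minimal pure-Python sketch. It is not DataRobot's implementation: it assumes the common glmnet-style parameterization (lambda is the overall penalty strength, alpha mixes the lasso and ridge penalties), and the function names and toy data are made up for illustration.

```python
def soft_threshold(rho, threshold):
    """Shrink rho toward zero; values within +/- threshold become exactly 0."""
    if rho > threshold:
        return rho - threshold
    if rho < -threshold:
        return rho + threshold
    return 0.0


def elastic_net(columns, y, lam, alpha=1.0, sweeps=200):
    """Cyclic coordinate descent for the assumed objective
    (1/2n)*||y - Xw||^2 + lam*(alpha*||w||_1 + (1 - alpha)/2*||w||_2^2).
    `columns` is a list of centered feature columns (no intercept is fitted)."""
    n = len(y)
    p = len(columns)
    w = [0.0] * p
    for _ in range(sweeps):
        for j, col in enumerate(columns):
            # Correlation of feature j with the residual that ignores feature j.
            rho = 0.0
            for i in range(n):
                others = sum(w[k] * columns[k][i] for k in range(p) if k != j)
                rho += col[i] * (y[i] - others)
            rho /= n
            scale = sum(v * v for v in col) / n
            w[j] = soft_threshold(rho, lam * alpha) / (scale + lam * (1.0 - alpha))
    return w


# Toy data: x2 has correlation 0.8 with x1 and adds very little on its own.
x1 = [1.0, 1.0, -1.0, -1.0]
x2 = [1.4, 0.2, -0.2, -1.4]
x3 = [1.0, -1.0, -1.0, 1.0]  # uncorrelated with x1 and x2
y = [2.0 * a + 0.03 * b + 1.0 * c for a, b, c in zip(x1, x2, x3)]

w_low = elastic_net([x1, x2, x3], y, lam=0.01)              # lasso, small lambda
w_high = elastic_net([x1, x2, x3], y, lam=0.1)              # lasso, larger lambda
w_ridge = elastic_net([x1, x2, x3], y, lam=0.1, alpha=0.0)  # ridge, for contrast

print("lasso lambda=0.01:", w_low)
print("lasso lambda=0.1: ", w_high)
print("ridge lambda=0.1: ", w_ridge)
```

On this toy data the near-redundant feature x2 keeps a small nonzero coefficient at lambda=0.01 and is set to exactly zero at lambda=0.1, while the ridge penalty (alpha=0) keeps both correlated features in the model. That is the behavior Step 3 relies on.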

Step 4. Run Feature Impact on this tuned model.

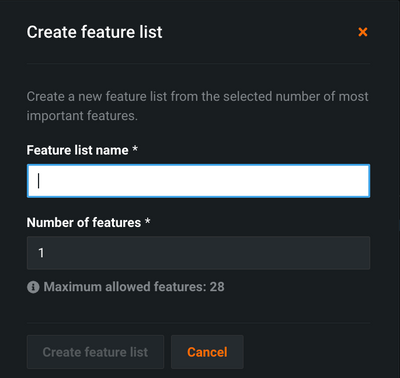

Step 5. Use the option to create a new feature list (Top N features) from Feature Impact, where N is the number of top variables that you would like to use. The lasso penalty tends to drop correlated features, so you can keep tuning the lambda parameter value (see Step 3) until enough correlated features have been dropped and you are happy with the result.

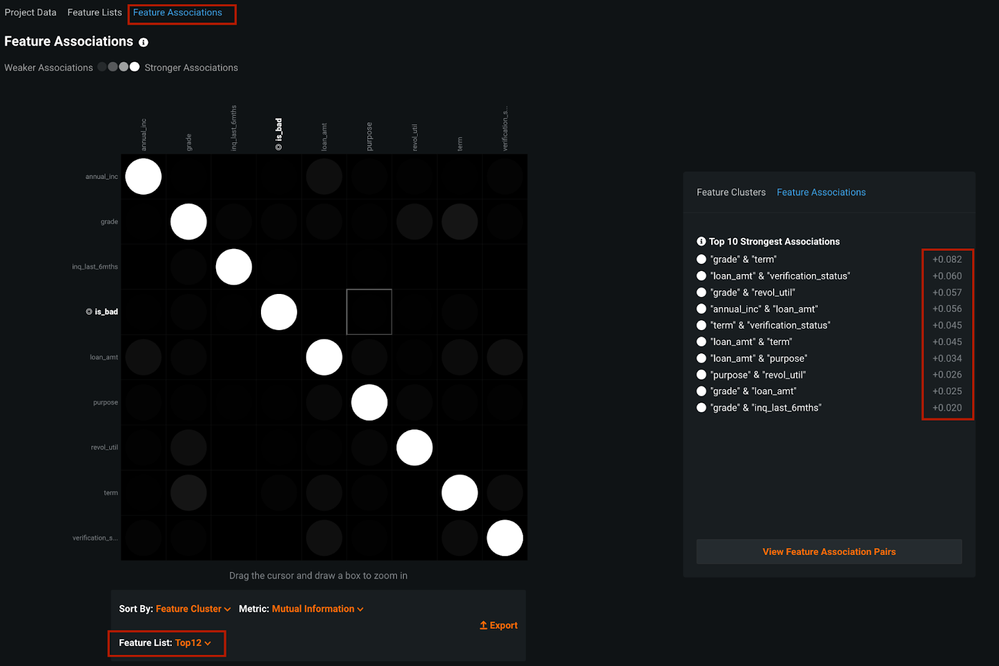

Step 6. Use Feature Associations to review the correlation between the variables in the new “Top N” feature list that you have created.

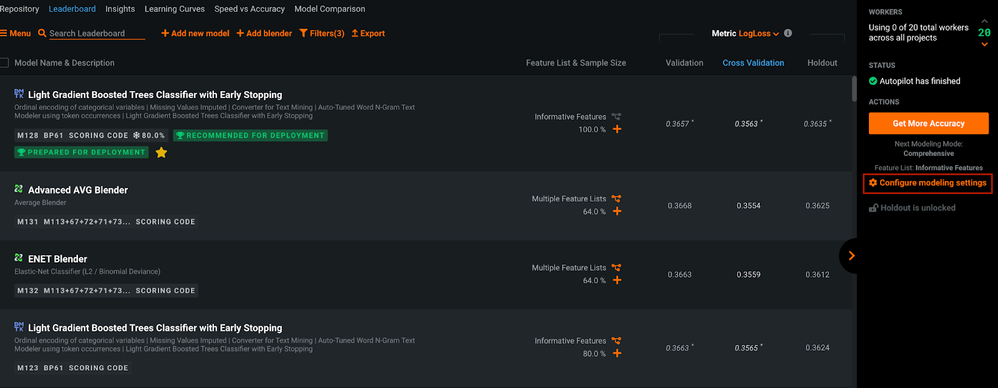

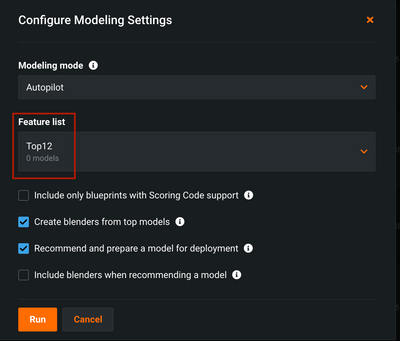
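If you also want to sanity-check the new list outside the UI, a rough sketch of a pairwise check is below. Note the swap: Feature Associations computes its own association metrics, whereas this illustration uses plain Pearson correlation on numeric columns. The `pearson` and `correlated_pairs` helpers, the 0.7 threshold, and the sample columns are all hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length numeric columns."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant column has no defined correlation; treat as 0
    return cov / sqrt(var_a * var_b)


def correlated_pairs(features, threshold=0.8):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    flagged = []
    for (name_a, col_a), (name_b, col_b) in combinations(features.items(), 2):
        r = pearson(col_a, col_b)
        if abs(r) >= threshold:
            flagged.append((name_a, name_b, r))
    return flagged


# Made-up columns standing in for an exported "Top N" feature list.
sample = {
    "x1": [1.0, 1.0, -1.0, -1.0],
    "x2": [1.4, 0.2, -0.2, -1.4],
    "x3": [1.0, -1.0, -1.0, 1.0],
}
flagged_pairs = correlated_pairs(sample, threshold=0.7)
print(flagged_pairs)
```

Any pair that is still flagged suggests going back to Step 3 and raising lambda a little further.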

Step 7. Finally, use this new feature list to train other models without the correlated features, either by re-running the Autopilot modeling process or by retraining a specific existing model from the Leaderboard.

Let me know if you have further questions about this tip!

Accepted Solutions

This is creative and brilliant. One extra tip I can suggest:

All of this is also possible in code using the DataRobot Python client. If you are interested in automating this and scaling it to many projects, it is all feasible with the Python API client.
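As a rough sketch of what Steps 3 to 5 might look like in code: the two helpers below take `project` and `model` objects and call the Advanced Tuning, Feature Impact, and feature list methods as I recall them from the Python client documentation (`start_advanced_tuning_session`, `set_parameter`, `run`, `get_result_when_complete`, `get_or_request_feature_impact`, `create_featurelist`). Please check them against your installed client version. The helper names are hypothetical, and the tuning parameter names `alpha` and `lambda` and the task name are assumptions that depend on the blueprint.

```python
def tune_to_lasso(model, task_name, lam, alpha_name="alpha", lambda_name="lambda"):
    """Step 3: rerun an elastic net model with alpha=1 (lasso) and a chosen lambda.

    `task_name` is the elastic net task inside the blueprint. The parameter
    names are assumptions; list the real ones from the tuning session first.
    """
    session = model.start_advanced_tuning_session()
    session.set_parameter(task_name=task_name, parameter_name=alpha_name, value=1)
    session.set_parameter(task_name=task_name, parameter_name=lambda_name, value=lam)
    job = session.run()
    return job.get_result_when_complete()  # the newly tuned model


def create_top_n_featurelist(project, model, n, name):
    """Steps 4-5: run Feature Impact and keep the top N features with nonzero impact."""
    impact = model.get_or_request_feature_impact()
    ranked = sorted(impact, key=lambda row: row["impactNormalized"], reverse=True)
    kept = [row["featureName"] for row in ranked if row["impactNormalized"] > 0][:n]
    return project.create_featurelist(name, kept)
```

With the real client, `project` and `model` would typically come from `dr.Project.get(...)` and `dr.Model.get(...)` after `import datarobot as dr` and configuring `dr.Client(...)`. The returned feature list can then be used for Step 7, for example by passing its ID when restarting Autopilot. Wrapping the two helpers in a loop over project IDs is one way to scale the tip to many projects.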
